Is your test data management strategy driving your organization forward, or holding it back?

Discover how four critical areas — speed, quality, compliance, and cost — are impacted by inadequate test data management. Explore how the right approach can address these challenges and find key questions to evaluate your organization’s current practices.

Enterprises are under immense pressure to innovate quickly, deliver software more frequently, and meet growing customer demands. Yet, too often, organizations rely on disparate, outdated tools to manage test data, creating bottlenecks that slow down digital transformation and negatively affect the developer experience. Test data management is so often a roadblock at the very moment when speed, security, and quality are paramount.

Many enterprises struggle to modernize their approach to test data management, preventing them from achieving true DevOps agility. Legacy solutions often fail to deliver production-quality, compliant test data fast enough to support accelerated application delivery. Worse, manual processes introduce inefficiencies and increase risks, leaving sensitive data exposed and compliance requirements unmet.

To keep up with DevOps demands, enterprises need to take a modernized approach, one centered on data virtualization and data masking. It combines the best aspects of traditional approaches without sacrificing speed, quality, or compliance. It lets you eliminate data bottlenecks, improve software release cadence, and reduce operational costs, paving the way for faster, future-proof innovation.

In this eBook, we will explain four key areas — speed, quality, compliance, and cost — that your test data management strategy and solutions can either hinder or accelerate. We will explore the challenges that inadequate test data management can pose to each area, review how the right strategy can solve them, and offer a checklist of what to ask when examining your organization’s current approach to test data management.

Comparing Test Data Management Approaches for Enterprise DevOps

The goal of an effective test data management strategy is to ensure development and testing teams have access to accurate, compliant, and production-like data. It’s the only way to accelerate application releases, improve quality, and meet stringent security mandates.

To start, let’s look at four traditional approaches teams use to do this.

Shared Production Data Copies

Using production data copies for testing offers broad coverage by replicating real-world scenarios, including edge cases. This approach closely mirrors live environments, making it highly effective for thorough validation.

However, in enterprise DevOps, this approach presents significant challenges. While it enhances test coverage, having to request test data slows down delivery pipelines, and every additional full copy of the dataset multiplies storage and infrastructure costs. It also poses a serious risk of exposing sensitive information, potentially leading to non-compliance with regulations like GDPR, CCPA, and HIPAA, even if safeguards like encryption are used.

Additionally, coordinating access to shared production data across large teams is complex and cumbersome. These factors make this approach less agile and ill-suited for enterprise development workflows.

Subsetting Production Data

Subsetting involves creating smaller, focused datasets by extracting portions of full production data copies. This approach is often used to save on storage costs while providing manageable test data for development and testing purposes.

While subsetting reduces hardware, CPU, and licensing costs, it has significant drawbacks. Because the data is so limited, it often excludes edge cases, so defects go undetected in testing and surface in production. It can also pose major compliance risks if sensitive data isn’t properly masked.
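To make the edge-case risk concrete, here is a minimal sketch of subsetting, assuming two in-memory tables. The function `subset_with_children`, the `tier` field, and the sample data are all hypothetical, chosen only for illustration. It samples 10% of customers and keeps only the orders that reference them, so relationships stay intact while most rare records are left behind.

```python
import random

def subset_with_children(customers, orders, fraction, seed=0):
    """Sample a fraction of customers and keep only the orders that reference them."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    keep_count = max(1, round(len(customers) * fraction))
    kept_customers = rng.sample(customers, keep_count)
    kept_ids = {c["id"] for c in kept_customers}
    # Drop orders whose parent customer was not sampled, so no order is orphaned.
    kept_orders = [o for o in orders if o["customer_id"] in kept_ids]
    return kept_customers, kept_orders

# Hypothetical data: 100 customers, exactly one of which is a rare "legacy" record.
customers = [{"id": i, "tier": "standard"} for i in range(1, 101)]
customers[41]["tier"] = "legacy"
orders = [{"order_id": 1000 + i, "customer_id": (i % 100) + 1} for i in range(300)]

small_customers, small_orders = subset_with_children(customers, orders, fraction=0.1)
edge_case_survived = any(c["tier"] == "legacy" for c in small_customers)
print(len(small_customers), len(small_orders), edge_case_survived)
```

With uniform sampling, the single rare record has only a one-in-ten chance of landing in a 10% subset, which is how outliers end up missing from test runs.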

Synthetic Data

Synthetic data is artificially generated to replicate real-world data while safeguarding sensitive information. It is commonly used for targeted applications like testing features or developing greenfield projects, offering a controlled and compliant alternative to real data. Enterprises often combine synthetic data generation with other approaches, especially for early-stage testing cycles.

See how synthetic data is created >> AI Synthetic Data Demo

Standalone Data Masking

Masked data provides a way to transform sensitive production data into de-identified, compliant values. For many teams, it’s an optimal way to deliver highly realistic datasets for development and testing without exposing private information.

However, standalone or manual masking is not scalable for enterprises. Traditional methods often depend on slow, error-prone processes like custom masking scripts, which themselves require specialized programming expertise and lengthy consulting engagements.

Other times, teams may rely on the basic masking capabilities provided by their database vendors. These tools only work for the specific vendor, making them impractical for enterprises using multiple types of databases.

Standalone masking also makes it difficult to maintain referential integrity, meaning linked data relationships across tables or sources break. For example, a data field might be masked with one value (Jones becomes Robins) in one location and a different value (Jones becomes Redmond) elsewhere. Referential integrity is crucial for downstream operations, especially integration testing, where seamless data flow across systems is essential.

It is incredibly difficult to maintain referential integrity when a team is relying on custom scripts, and you can’t get referential integrity across multiple, heterogeneous sources if your masking tool only works for a single source.
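One common way to keep masked values consistent is deterministic masking, where the same input always produces the same output. The sketch below is illustrative only and does not describe any particular product. The replacement pool `SURNAMES`, the function `mask_surname`, and the key are assumptions made for the example. It uses a keyed hash (HMAC) to pick a replacement, so "Jones" maps to the same surname in every table that shares the key.

```python
import hashlib
import hmac

# Hypothetical replacement pool; a real tool would draw from a far larger dictionary.
SURNAMES = ["Robins", "Redmond", "Avery", "Castillo", "Nguyen", "Okafor"]

def mask_surname(value: str, key: bytes) -> str:
    """Pick a replacement surname deterministically from the keyed hash of the input."""
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).digest()
    index = int.from_bytes(digest[:8], "big") % len(SURNAMES)
    return SURNAMES[index]

# Assumption: one secret key shared by every masking job, stored outside the code.
key = b"example-key-not-for-production"

customers = [{"id": 1, "surname": "Jones"}, {"id": 2, "surname": "Patel"}]
orders = [{"order_id": 900, "customer_surname": "Jones"}]

masked_customers = [{**c, "surname": mask_surname(c["surname"], key)} for c in customers]
masked_orders = [
    {**o, "customer_surname": mask_surname(o["customer_surname"], key)} for o in orders
]

# The same source value yields the same masked value in both tables.
print(masked_customers[0]["surname"] == masked_orders[0]["customer_surname"])
```

Because the mapping depends only on the input and the key, separately masked sources still join correctly. With a pool this small, different surnames can collide on the same replacement, which is one reason production tools rely on much larger dictionaries and purpose-built algorithms.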

| Test Data Type | Speed | Quality | Compliance | Cost & Efficiency |
|---|---|---|---|---|
| Production Data Copy | Slow, manual delivery | Good test coverage | Sensitive data at risk | High consumption of storage, CPU, and licenses |
| Subset of Production Data | Slow manual delivery, especially in DevOps terms | Missed test case outliers | Sensitive data at risk | Some storage, CPU, and license savings |
| Synthetic Data | Delivery and sharing is still a manual process | Limited to a small subset of test requirements | Data de-identification not required | Depends on volume of data generated |
| Standalone Masking | Masked data still requires manual delivery | Masked data is production-like in quality | Compliant data, no sensitive data risk | High consumption of storage, CPU, and licenses |

Get more insights into masking >> Data Masking Methods & Techniques: The Complete Guide

A Modern Approach: Data Virtualization + Masking

An approach that incorporates data masking and virtualization is optimal for enterprise teams looking to scale and streamline test data management.

By combining virtualized, production-like test data copies with automated sensitive data masking, this method ensures test environments are lightweight, secure, and closely resemble production systems. It supports rapid, efficient software development while enabling faster provisioning, improved compliance with regulations like GDPR, and significant storage cost reductions.

| Test Data Type | Speed | Quality | Compliance | Cost & Efficiency |
|---|---|---|---|---|
| Data Virtualization + Masking | Automated, API-centric | Good test coverage | Compliant data, no sensitive data risk | Significant storage savings |

Now that we have the basics covered, let’s explore in more detail each of these four key areas that the right test data management strategy can improve for your enterprise: speed, quality, compliance, and cost/efficiency. We will review:

- Common challenges teams face in each area.

- How data virtualization + masking can solve them.

- A checklist of questions to ask when assessing your current strategy.

Development Speed

The Problem: Data Delivery Delays

Making a copy of production data available to a downstream testing environment is often a time-consuming, labor-intensive process that involves multiple handoffs between teams. The end-to-end process usually lags demand, and at a typical IT organization, delivering a new copy of production data to a non-production environment takes weeks or even months.

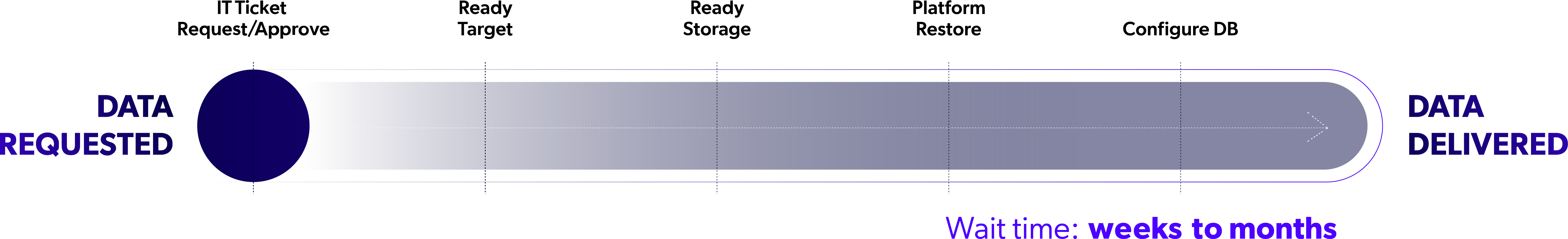

Here’s an example of the stages of a typical ticket-driven, manual system for requesting test data, to illustrate why it slows teams down so much:

With a manual system like this, developers may spend the majority of their time waiting for the test data they need and a far smaller portion of their time actually writing code.

The Solution: Automation & APIs

Enterprises looking for a test data management solution to support their DevOps initiatives must adopt a solution that streamlines test data delivery. It must create a path toward fast and repeatable data delivery to unlimited downstream teams and environments, from multicloud and multi-generational data sources.

DevOps teams need solutions with modern APIs that automate the delivery and management of ephemeral datasets. These solutions should seamlessly integrate test data with DevOps toolchains, enabling smooth inclusion in the CI/CD pipeline.
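As a rough illustration of what API-driven, ephemeral data delivery can look like inside a pipeline step, consider the sketch below. `InMemoryDataService` is a hypothetical stand-in written for this example, not a real client library, and its `provision` and `teardown` methods are assumed names. The point is the pattern: a context manager provisions a dataset for the duration of a test run and always tears it down afterward, even when the tests fail.

```python
import itertools
from contextlib import contextmanager

class InMemoryDataService:
    """Hypothetical stand-in for a test data platform's API client."""

    def __init__(self):
        self._counter = itertools.count(1)
        self.active = {}

    def provision(self, source: str) -> str:
        dataset_id = f"{source}-copy-{next(self._counter)}"
        self.active[dataset_id] = {"source": source}
        return dataset_id

    def teardown(self, dataset_id: str) -> None:
        self.active.pop(dataset_id, None)

@contextmanager
def ephemeral_dataset(service, source):
    """Provision a dataset for the duration of a block, then always release it."""
    dataset_id = service.provision(source)
    try:
        yield dataset_id
    finally:
        service.teardown(dataset_id)  # runs on success and on failure

service = InMemoryDataService()
with ephemeral_dataset(service, "orders-db") as dataset_id:
    in_use = dataset_id in service.active  # tests would run against the dataset here
remaining = len(service.active)
print(in_use, remaining)
```

In a real pipeline, the two service methods would wrap calls to whatever API your test data platform exposes, and the `with` block would contain the test stage itself.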

Development Speed Checklist

Here are some questions to ask when evaluating how well your current test data management strategy and solutions support development speed:

- Is your data delivery process manual, or automated with APIs?

- Can you instantly spin up an unlimited number of new, ephemeral test data environments?

- Can you continuously sync test data to production data?

- Can test data delivery be automatically integrated with DevOps toolchains — with tools like Jenkins, Terraform, and ServiceNow?

- Can data distribution be integrated with existing QA workflows?

Application Quality

The Problem: Low Data Quality & Availability

Delivering high-quality data for development and QA comes with significant challenges. Data quality is often judged by the number of data-related defects uncovered during the software development lifecycle. Stale or outdated production data in application development and QA environments can hinder software quality goals.

If QA teams lack timely access to production-quality data, defects may increase, debugging takes longer, and project releases are delayed. Competing for limited data environments forces testers and developers to context switch, slowing progress and introducing avoidable errors.

And without the right data, false positives and negatives arise, pushing errors later into the pipeline or even into production due to the unavailability of accurate data during early development phases.

The Solution: Shift Left with Self-Service Data

To ensure high application quality, organizations must adopt a “shift left” approach, addressing issues early in the software development lifecycle by giving developers and testers instant access to their own data environments. These dedicated environments should include multiple datasets and enable programmatic refreshes via API. Doing so ensures they have access to the latest production-quality data when they need it.

By integrating these capabilities into software development lifecycle workflows, teams gain API-centric controls to:

- Curate data libraries and easily access test data copies.

- Branch, bookmark, share, teardown, and refresh data.

- Restore datasets to specific points in time.

- Instantly rewind data after destructive testing.

This proactive approach reduces defects, accelerates releases, and enhances overall software quality.
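The bookmark, rewind, and branch operations listed above can be pictured with a toy model. The `VirtualDataset` class below is a hypothetical sketch for illustration. It stores full deep copies for simplicity, whereas real data virtualization shares unchanged blocks between copies rather than duplicating them.

```python
import copy

class VirtualDataset:
    """Toy dataset with named bookmarks; not how any real product stores data."""

    def __init__(self, rows):
        self.rows = copy.deepcopy(rows)
        self._bookmarks = {}

    def bookmark(self, name):
        """Save the current state under a name."""
        self._bookmarks[name] = copy.deepcopy(self.rows)

    def rewind(self, name):
        """Restore the state saved under a name."""
        self.rows = copy.deepcopy(self._bookmarks[name])

    def branch(self):
        """Create an independent dataset that starts from the current state."""
        return VirtualDataset(self.rows)

dataset = VirtualDataset([{"id": 1, "status": "open"}, {"id": 2, "status": "open"}])
dataset.bookmark("before-destructive-test")

dataset.rows.clear()                        # a destructive test wipes the table
dataset.rewind("before-destructive-test")   # the tester restores the saved state

teammate_copy = dataset.branch()
teammate_copy.rows[0]["status"] = "closed"  # changes on a branch stay on the branch
print(len(dataset.rows), dataset.rows[0]["status"])
```

The value for testers is that destructive tests become cheap to run: the state before the test is always one call away, and a branch lets a teammate reproduce a defect without disturbing the original environment.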

Application Quality Checklist

Here are some questions to ask when evaluating how well your current test data management strategy and solutions support application quality:

- Can developers and testers use modern APIs to instantly provision and refresh complete, production-like data?

- Can you automatically deliver an unlimited number of ephemeral datasets?

- Can developers and testers instantly rewind after destructive testing?

- Can developers and testers quickly share their data environments with each other during the test cycle?

- Can developers and testers bookmark their data environments and create their own test data catalog?

- Can you provision from multiple data sources to the same point in time? Or do a simultaneous reset to a single point in time for federated system integration testing?

Data Compliance

The Problem: Balancing Data Privacy & Speed

Discovering and masking sensitive data in development and testing environments, such as those handling credit card numbers or patient records, is critical for regulatory compliance with laws like GDPR, CCPA, and HIPAA. A data breach can cost millions in remediation, customer loss, and brand damage.

While masking sensitive data is the optimal way to safely take advantage of full datasets, it can increase operational overhead, slow development, and harm application quality if not done properly. Traditional end-to-end masking takes days or weeks, delaying test cycles. Integrating masking into test data management workflows without sacrificing speed and simplicity remains a significant challenge for organizations.

The Solution: Automated Masking Integrated with Data Virtualization

Organizations need a solution that is easy to use, fast to deploy, and scalable across the enterprise.

Policies should identify sensitive data at a granular level, locate it, and prescribe specific masking algorithms. Automating this process allows continuous identification, masking, and delivery of data. Masking ensures realistic test data while maintaining integrity across sources. Teams can monitor privacy risks as data changes and apply masking when needed, ensuring fresh, masked copies are always available.
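A policy of this kind can be pictured as a list of rules, each pairing a pattern that detects a type of sensitive data with the algorithm used to mask it. The sketch below is a simplified illustration under stated assumptions: the rule names, regular expressions, and masking functions are hypothetical, and the unkeyed hash used for emails only keeps the example short. Real discovery relies on far richer profiling than two patterns.

```python
import hashlib
import re

def mask_email(value: str) -> str:
    # Assumption: an unkeyed hash is enough for a demo; real policies use vetted algorithms.
    token = hashlib.sha256(value.lower().encode("utf-8")).hexdigest()[:10]
    return f"user_{token}@example.com"

def mask_card_number(value: str) -> str:
    # Illustrative choice: hide every digit except the last four.
    return "*" * (len(value) - 4) + value[-4:]

# Each rule: (label, pattern that detects the data type, masking algorithm to apply).
POLICY = [
    ("email", re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"), mask_email),
    ("card_number", re.compile(r"\d{13,19}"), mask_card_number),
]

def classify_column(values):
    """Return the first rule whose pattern matches every value in the column."""
    for label, pattern, mask in POLICY:
        if values and all(pattern.fullmatch(v) for v in values):
            return label, mask
    return None

def mask_table(table):
    """Mask every column that matches a rule; report which rule was applied where."""
    masked, report = {}, {}
    for column, values in table.items():
        rule = classify_column(values)
        if rule is None:
            masked[column] = list(values)
        else:
            label, mask = rule
            report[column] = label
            masked[column] = [mask(v) for v in values]
    return masked, report

table = {
    "contact": ["ana@corp.test", "raj@corp.test"],
    "pan": ["4111111111111111", "5500005555555559"],
    "city": ["Lisbon", "Pune"],
}
masked, report = mask_table(table)
print(report)
```

Because discovery looks at the values rather than the column names, the `pan` column is still caught even though its name gives no hint that it holds card numbers.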

Easy, automated masking speeds up the process of creating masked data. And integrating masking with data virtualization streamlines the process of putting that data into the hands of development teams.

These measures prevent sensitive data from moving unprotected across teams. Application development and QA teams can confidently use non-sensitive test data, meeting compliance requirements while safeguarding the organization.

Data Compliance Checklist

Here are some questions to ask when evaluating how well your current test data management strategy and solutions support data compliance:

- Is the process of finding sensitive data automated?

- Is masking algorithm-driven?

- How long does it take to mask the data and then deliver it to all downstream teams?

- Is the masked data realistic and is referential integrity preserved?

- Do you need separate solutions to mask and deliver the data?

- Do you need to provide data to external third parties on-site or off-site across geographies?

Cost and Efficiency

The Problem: Redundant Test Data & Inefficient Cloud Usage

To meet overlapping demands, operations teams must coordinate test data across multiple groups, applications, and release versions. In doing this, IT organizations often create redundant copies of test data, driving up both storage and cloud compute costs.

With up to 80% of data being redundant, shared datasets across test, development, reporting, and production environments further inflate costs. Additionally, underutilized cloud resources and idle environments exacerbate cloud expenses, making budgeting unpredictable and wasteful.

The Solution: Streamlined Data Delivery & Ephemeral Environments

By adopting advanced data delivery models and ephemeral environments, organizations can cut costs and improve efficiency. A virtual master copy of production data can sync in real-time, delivering space-efficient, on-demand data to downstream teams. Ephemeral environments, which spin up only when needed and deallocate afterward, maximize cloud utilization and eliminate waste.

Together, these approaches reduce storage needs, optimize cloud resource allocation, and lower cloud bills, enabling faster development cycles and significant cost savings.
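A quick back-of-the-envelope calculation shows where the storage savings come from. The figures below are illustrative assumptions, not measurements: a 1,000 GB source, 10 test copies, and 2% of the data changed in each copy. Full copies store everything ten times, while virtual copies share one base and store only their own changes.

```python
def full_copy_storage_gb(source_gb: int, copies: int) -> int:
    """Every environment holds its own complete copy of the source."""
    return source_gb * copies

def virtual_copy_storage_gb(source_gb: int, copies: int, changed_percent: int) -> int:
    """One shared base copy, plus only the changed data for each virtual copy."""
    changed_per_copy_gb = source_gb * changed_percent // 100
    return source_gb + changed_per_copy_gb * copies

# Illustrative assumptions, not measurements.
source_gb, copies, changed_percent = 1000, 10, 2

full_gb = full_copy_storage_gb(source_gb, copies)
virtual_gb = virtual_copy_storage_gb(source_gb, copies, changed_percent)
savings_percent = 100 * (full_gb - virtual_gb) / full_gb
print(full_gb, virtual_gb, savings_percent)
```

Under these assumptions, ten full copies consume 10,000 GB while the virtualized setup consumes 1,200 GB. Actual savings depend on how many copies you keep and how much each one diverges from the source.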

Cost and Efficiency Checklist

Here are some questions to ask when evaluating how cost-efficient your current test data management strategy and solutions are:

- How many test data copies do you need to maintain for every production data source?

- Can you deliver an unlimited number of production quality test datasets while keeping storage consumption low?

- How much time does the team spend on provisioning these copies?

- How often are your test environments idle?

- Can you provision and tear down ephemeral test data environments?

- Are you able to use modern cloud object stores and caching methods to further reduce space utilization?


Scale Up DevOps With Next-Generation Test Data Management from Perforce Delphix

Building a successful DevOps pipeline at enterprise scale requires a modern test data management solution. Perforce Delphix delivers automated, compliant test data to accelerate DevOps, improve quality, and reduce risk. By virtualizing and masking data at scale, Delphix empowers teams to move fast and efficiently while meeting the strictest security and privacy mandates.

Here’s how Delphix supports your enterprise:

Accelerate Development Velocity

Time is critical in development, and delays cost more than just productivity. With Delphix, you can provision test data 100x faster and refresh in minutes for faster releases. Developers get self-service access to test data with the ability to refresh, bookmark, rewind, and branch data instantly, without administrative intervention. In fact, IDC Research found that Delphix users developed applications 58% faster.*

Reduce bottlenecks and empower your teams with instant access to production-quality data.

Improve Application Quality

High-quality applications demand high-quality test data — and 31% of organizations cite poor quality data as a major challenge, according to Perforce’s State of Continuous Testing Report.

Delphix ensures developers and testers can work with complete, consistent, and up-to-date datasets, minimizing defects. By offering automated provisioning and refresh capabilities, Delphix supports shift-left testing and accelerates release cycles.

Ensure Data Privacy & Security

Compliance doesn’t have to slow you down. Delphix automates sensitive data discovery and data masking, ensuring compliance with regulations like GDPR, HIPAA, and CCPA across all environments. Built-in policy enforcement preserves referential integrity, giving you peace of mind while protecting your enterprise from costly fines. And with Delphix, 77% more data and data environments are masked and protected.*

Cut Costs, Improve Efficiency

Reduce operating costs with Delphix’s space-saving virtualization technology. Eliminate redundant storage expenses and leverage ephemeral test environments to optimize infrastructure. Save up to 80% on storage costs while allocating resources to drive innovation.

And realize a fast return on your investment: IDC Research also found that Delphix users experienced a 408% 3-year ROI, including $8.4 million in additional revenue from improved software development productivity.*

Further Reading >> What is Delphix?

See Delphix in Action

Future-proof your DevOps pipeline with Perforce Delphix. Request a test data management demo to see for yourself how we accelerate innovation.