August 24, 2020

Over the last decade, test management systems have remained largely unchanged. We have seen advances in test automation and in shifting testing closer to development (shift-left testing), but these systems have not yet embraced the power that AI can bring to this important part of the software development lifecycle. AI can help us understand the relationships between code, tests, and issues, and so reduce the number of errors that reach production systems.

Here we will discuss how AI and ML can be leveraged to improve test management and software quality, and to understand the impact of changes from design through to production.

Specific Activities Benefiting from AI Testing and Machine Learning in Software Testing

To explain how AI and ML in test management are evolving, let us first briefly cover what test management is.

Test management refers to the activity of managing the testing process, including both manual and automated testing activities. A test management tool is software used to manage the test artifacts (manual or automated) specified by a test procedure.

There are two main parts to the test management process:

- Planning
  - Requirement and risk analysis
  - Test planning and design
  - Test organization
- Execution
  - Test monitoring and control
  - Issue management
  - Test report and evaluation

We will focus on how AI and ML can be applied to the two distinct areas of planning and execution.

AI and ML During the Planning Process

Planning consists of requirements and risk analysis as well as test planning, design, and organization. These activities are largely coordinated inside a database or database-driven tool, and they eventually produce relationships between artifacts.

For example, you might have a set of product requirements, each of which needs one or more corresponding tests to ensure full test coverage. Most, if not all, of these activities are done manually. This is error-prone and consumes a lot of manual effort that could be spent on more valuable tasks.

Let’s have a look at what AI and ML can bring to the table.

Requirements/User Story Quality Analysis and Profiling

Engineering teams understand that requirements management is critical to the success of any project. Poorly defined requirements result in project delays, cost overruns, and poor product quality.

Applying AI to the requirements analysis phase can reduce total design and review time. More importantly, it can help reduce the number of poorly defined requirements, which account for more than half of all engineering errors (bugs/issues). Eliminating the rework these errors cause also reduces product development cost, because the cost of correcting an error increases exponentially as a project progresses.

Using AI, we can also isolate requirement issues before they are sent for human review, and receive suggestions for improvements during the design cycle.
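To make "isolating requirement issues" concrete, here is a minimal sketch that flags common requirement smells before review. It is a rule-based stand-in, not an ML model: the vague-term list, the checks, and the `analyze_requirement` function are hypothetical, and a trained classifier would take their place in a real tool.

```python
import re
from dataclasses import dataclass

# Hypothetical vague terms; a real tool would learn these from reviewed requirements.
VAGUE_TERMS = {"fast", "quickly", "user-friendly", "easy", "flexible",
               "appropriate", "etc", "as needed", "robust", "efficient"}


@dataclass
class Finding:
    requirement_id: str
    issue: str


def analyze_requirement(req_id: str, text: str) -> list[Finding]:
    """Return rule-based findings for one requirement (illustrative only)."""
    findings = []
    lowered = text.lower()
    for term in sorted(VAGUE_TERMS):
        # Whole-word match, so "fast" does not fire on "breakfast".
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            findings.append(Finding(req_id, f"vague term: '{term}'"))
    # Without an obligation keyword, it is unclear what must be verified.
    if not re.search(r"\b(shall|must)\b", lowered):
        findings.append(Finding(req_id, "no 'shall'/'must' obligation"))
    # No number at all often means no measurable acceptance criterion.
    if not re.search(r"\d", text):
        findings.append(Finding(req_id, "no measurable value"))
    return findings


for finding in analyze_requirement("REQ-1", "The login page should load fast."):
    print(finding.issue)
```

A rewritten requirement such as "The login page shall load within 2 seconds." produces no findings, which is the kind of improvement suggestion the design cycle would surface.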

Test Planning and Design

The quality of test cases can vary greatly between teams, depending on the experience, skill set, and background of each team member. Today, teams create tests based on requirements or user stories (acceptance criteria); if neither exists, they focus on areas that suffer from constant quality issues and bug reports.

Many teams will add to their test cases by looking at issues that are found during the testing cycle or reported when the system is in production. This is a very reactive approach.

Applying AI and ML to automatically generate tests can help teams increase their test coverage and, more importantly, ensure they have the right tests to verify and validate the system under test.

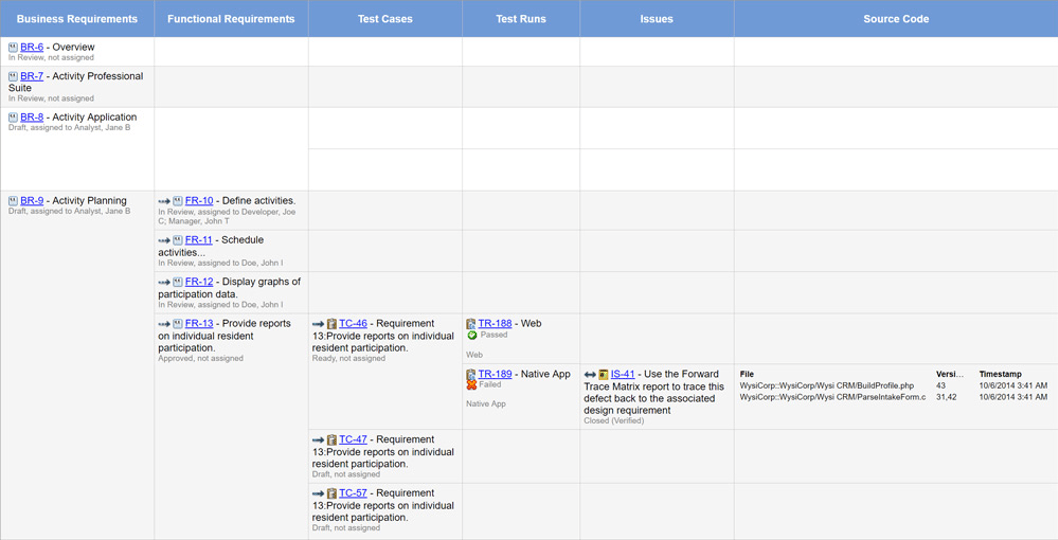

Today, the best way to understand whether you have good test coverage is to use a trace matrix. A trace matrix provides an easy and fast way to see if you have a corresponding test for each requirement and, if a requirement changes, what the impact of that change would be (impact analysis).
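A trace matrix is essentially a mapping from requirements to the tests that verify them. The sketch below, with hypothetical IDs and function names, inverts test-to-requirement links into matrix rows, lists requirements with no test, and answers the impact-analysis question of which tests to revisit when a requirement changes.

```python
# Hypothetical links exported from a test management tool: test ID -> requirement IDs.
TEST_LINKS = {
    "TC-1": {"REQ-1"},
    "TC-2": {"REQ-1", "REQ-2"},
    "TC-3": {"REQ-3"},
}
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]


def build_trace_matrix(requirements, test_links):
    """Invert test -> requirement links into requirement -> tests rows."""
    matrix = {req: set() for req in requirements}
    for test_id, reqs in test_links.items():
        for req in reqs:
            matrix.setdefault(req, set()).add(test_id)
    return matrix


def uncovered(matrix):
    """Requirements with no corresponding test (coverage gaps)."""
    return sorted(req for req, tests in matrix.items() if not tests)


def impacted_tests(matrix, changed_requirement):
    """Impact analysis: tests to revisit when a requirement changes."""
    return sorted(matrix.get(changed_requirement, set()))


matrix = build_trace_matrix(REQUIREMENTS, TEST_LINKS)
for req, tests in matrix.items():
    print(req, sorted(tests) or "NO TEST")
print(uncovered(matrix))                # ['REQ-4']
print(impacted_tests(matrix, "REQ-1"))  # ['TC-1', 'TC-2']
```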

AI and ML can be applied to this traceability data to understand what test coverage is required. They can also flag missing or inadequate tests based on existing data models. AI is increasingly used in test automation, where it can evaluate the "area" or "component" under test and create the relationship between the automated tests and the requirements, as shown above.
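As a rough illustration of suggesting links and flagging missing tests, the sketch below replaces ML with a deliberately simple measure: word overlap (Jaccard similarity) between test descriptions and requirement text. The 0.2 threshold, the sample data, and the function names are assumptions for illustration; a production system would use a trained model and richer signals such as the component under test.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens, ignoring words of two letters or fewer."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 2}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_links(requirements: dict[str, str], tests: dict[str, str],
                  threshold: float = 0.2) -> dict[str, list[str]]:
    """For each test, suggest requirements whose text overlaps enough, best first."""
    suggestions = {}
    for test_id, test_text in tests.items():
        t = tokens(test_text)
        scored = sorted(
            ((jaccard(t, tokens(r_text)), r_id) for r_id, r_text in requirements.items()),
            reverse=True,
        )
        suggestions[test_id] = [r_id for score, r_id in scored if score >= threshold]
    return suggestions


def unlinked_requirements(requirements: dict[str, str],
                          suggestions: dict[str, list[str]]) -> list[str]:
    """Requirements that no test was linked to: candidates for missing tests."""
    linked = {r_id for r_ids in suggestions.values() for r_id in r_ids}
    return sorted(set(requirements) - linked)


requirements = {
    "REQ-1": "User can reset password via email",
    "REQ-2": "Cart total includes sales tax",
}
tests = {"test_password_reset": "password reset sends email to user"}
links = suggest_links(requirements, tests)
print(links)                                       # {'test_password_reset': ['REQ-1']}
print(unlinked_requirements(requirements, links))  # ['REQ-2']
```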

Applying AI and ML to automatically generate tests (manual or automated) will reduce escaped defects, because it yields higher test coverage than manual test case generation. Teams can focus on the more complex parts of their product that require more testing, and let ML create or suggest tests based on the relationships between items. ML can also detect when a change occurs and predict which tests you need to run first to catch bugs faster.

AI and ML During Test Execution

Traditional test management systems rely on users to enter results as they flow back from manual testing or test automation systems. Automated testing solutions can push execution results back into the test management tool, but the large volume of results often turns these systems into a "dumping ground" of data that no one analyzes. Alternatively, it takes days to understand what failed and, more importantly, why.
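One way to keep automated results from becoming a dumping ground is to group failures by a normalized error signature, so that many failures collapse into a handful of distinct causes. The sketch below uses simple regular-expression normalization as a stand-in for the ML-based clustering a real tool might use; the result-record fields (`status`, `error`) and the sample data are hypothetical.

```python
import re
from collections import Counter


def signature(message: str) -> str:
    """Mask volatile details (hex addresses, numbers) so similar errors match."""
    msg = re.sub(r"0x[0-9a-fA-F]+", "<addr>", message)  # before digits: hex contains digits
    msg = re.sub(r"\d+", "<n>", msg)
    return msg.strip()


def triage(results):
    """Count failed results per error signature, most frequent first."""
    failures = Counter(
        signature(r["error"]) for r in results if r["status"] == "failed"
    )
    return failures.most_common()


results = [
    {"test": "test_login", "status": "failed", "error": "Timeout after 30s on node 7"},
    {"test": "test_cart", "status": "failed", "error": "Timeout after 45s on node 2"},
    {"test": "test_search", "status": "passed", "error": ""},
    {"test": "test_profile", "status": "failed", "error": "Null reference at 0x7ffe12"},
]
for sig, count in triage(results):
    print(count, sig)
```

Reviewing two signatures instead of three raw failures is a small gain here, but the same grouping scales to thousands of results per run.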

Understanding the impact of a new feature or change is key to reducing noise as you start to push features into a continuous testing cycle. This is where AI and ML can be applied to create and inspect links between items and to assess the outcome of changes to one or more of them.

Let’s imagine that a test has just failed, and a new bug has been created for a software engineer to resolve (all of which can easily be automated today). As the engineer sets out to resolve the bug, they end up changing multiple files that affect other parts of the solution. The engineer commits the code that resolves the initial bug, but then a new issue is raised that was caused by this change.

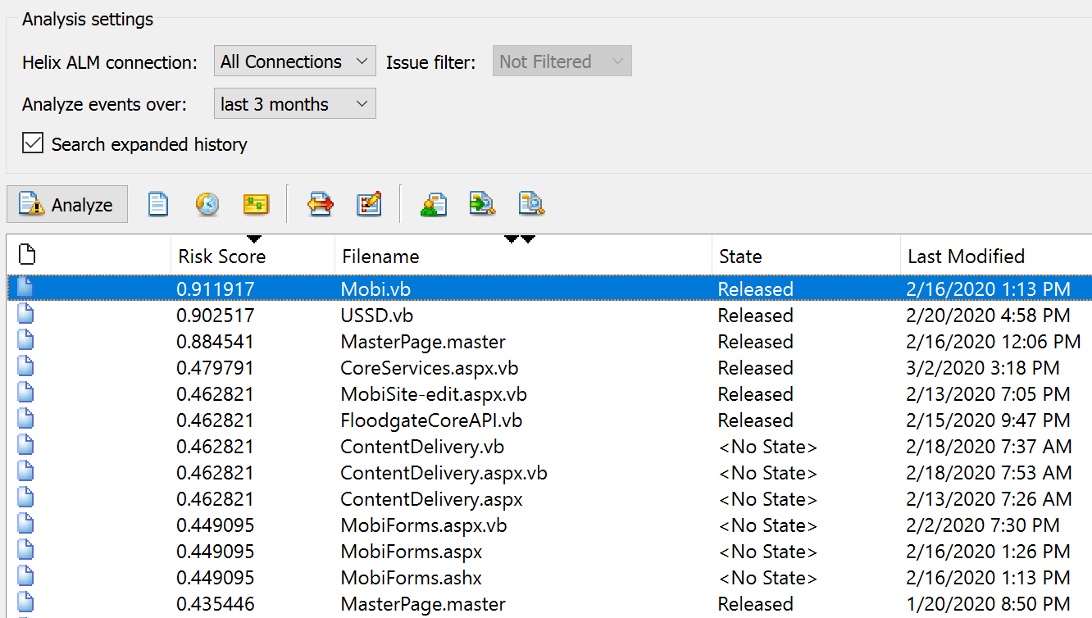

By using AI and ML to analyze the resulting bugs, we could show engineers the set of areas they will affect by making a change, or identify high-risk areas in our source code repository. This can greatly reduce the impact of changes and help us understand what that impact could be before a change is committed.
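A simple, non-ML way to approximate "the set of areas an engineer will affect" is co-change analysis: files that were repeatedly modified together in past bug fixes are likely to be affected together again. This sketch assumes the bug-fix history has already been extracted from the repository as one set of file paths per fix; the data and the `co_change_impact` function are hypothetical.

```python
from collections import Counter


def co_change_impact(history, changed_files, top_n=5):
    """Rank files that historically changed alongside the given files.

    history: list of sets, each holding the files touched by one past bug fix.
    Returns (file, times co-changed) pairs, most frequent first.
    """
    changed = set(changed_files)
    scores = Counter()
    for files in history:
        if files & changed:
            # Every other file in that fix is a candidate impacted area.
            for path in files - changed:
                scores[path] += 1
    return scores.most_common(top_n)


history = [
    {"auth/login.py", "auth/session.py"},
    {"auth/login.py", "auth/session.py", "ui/header.py"},
    {"cart/total.py", "cart/tax.py"},
]
print(co_change_impact(history, ["auth/login.py"]))
# [('auth/session.py', 2), ('ui/header.py', 1)]
```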

ML can also be applied to a code repository to identify high-risk code based on the number of changes (bug fixes) applied to individual files over time.
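The high-risk-code idea can be sketched as a hotspot score: count the bug fixes applied to each file, optionally weighting recent fixes more heavily. The exponential decay with a 90-day half-life is an assumption added for illustration (the text only mentions the number of changes over time), as are the sample data and the function name.

```python
from collections import defaultdict
from datetime import date


def hotspot_scores(fixes, today, half_life_days=90.0):
    """Rank files by recency-weighted bug-fix count, riskiest first.

    fixes: iterable of (fix_date, file_path) pairs, one per file touched by a bug fix.
    Each fix contributes 0.5 ** (age_days / half_life_days), so a fix made
    half_life_days ago counts half as much as one made today.
    """
    scores = defaultdict(float)
    for fix_date, path in fixes:
        age_days = (today - fix_date).days
        scores[path] += 0.5 ** (age_days / half_life_days)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


fixes = [
    (date(2020, 8, 1), "billing/invoice.py"),
    (date(2020, 7, 15), "billing/invoice.py"),
    (date(2019, 1, 10), "ui/theme.py"),
    (date(2019, 2, 3), "ui/theme.py"),
    (date(2019, 3, 9), "ui/theme.py"),
]
for path, score in hotspot_scores(fixes, today=date(2020, 8, 24)):
    print(f"{score:.2f}  {path}")
```

Here `billing/invoice.py` ranks above `ui/theme.py` despite fewer fixes, because its fixes are recent. Setting a very large `half_life_days` makes every fix count as roughly 1, which approximates a plain count of bug fixes per file.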

Applying AI to create these links between the issues (bugs), tests, and code commits can greatly improve the ability of any engineer to see the potential risk of a change to the repository. This can also be used to identify and create new test cases to help mitigate the risk of future changes.
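Links between issues and commits are often bootstrapped from commit messages that mention an issue key. The sketch below assumes Jira-style keys such as `SHOP-42` (the pattern is a simplification of real key rules) and a hypothetical commit record with `sha`, `message`, and `files` fields. Once commits are linked to issues, the files behind each bug become available for risk analysis.

```python
import re
from collections import defaultdict

# Simplified Jira-style issue key: uppercase project key, hyphen, number.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def link_commits_to_issues(commits):
    """Map each issue key to the (sha, files) of commits that mention it."""
    links = defaultdict(list)
    for commit in commits:
        # set() so a key repeated in one message links the commit only once.
        for key in set(ISSUE_KEY.findall(commit["message"])):
            links[key].append((commit["sha"], tuple(commit["files"])))
    return dict(links)


commits = [
    {"sha": "a1b2c3", "message": "SHOP-42: fix rounding in cart total",
     "files": ["cart/total.py"]},
    {"sha": "d4e5f6", "message": "Refactor tax rules (SHOP-42, SHOP-57)",
     "files": ["cart/tax.py"]},
    {"sha": "0789ab", "message": "Update README", "files": ["README.md"]},
]
for key, linked in sorted(link_commits_to_issues(commits).items()):
    print(key, linked)
```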

Understanding Which Tests to Execute First

AI and ML can be applied to show which tests are relevant to the changes and which should be executed first. The goal is to run the most relevant tests first to catch bugs faster, and then push the change into the defined pipeline.
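Test prioritization can be sketched as a scoring function. The version below is a hand-weighted heuristic standing in for a learned model: it ranks tests by how many changed files they cover (the coverage map is assumed to come from coverage tooling) plus their historical failure rate. The weights, field names, and data are hypothetical.

```python
def prioritize_tests(tests, changed_files, weight_overlap=2.0, weight_fail=1.0):
    """Return test IDs ordered so the most relevant tests run first.

    tests: dict of test ID -> {"covers": set of file paths, "fail_rate": 0.0-1.0}.
    score = weight_overlap * (changed files the test covers)
            + weight_fail * (historical failure rate)
    """
    changed = set(changed_files)

    def score(item):
        _, info = item
        overlap = len(info["covers"] & changed)
        return weight_overlap * overlap + weight_fail * info["fail_rate"]

    ranked = sorted(tests.items(), key=score, reverse=True)
    return [test_id for test_id, _ in ranked]


tests = {
    "test_checkout": {"covers": {"cart/total.py", "cart/tax.py"}, "fail_rate": 0.10},
    "test_login": {"covers": {"auth/login.py"}, "fail_rate": 0.30},
    "test_tax_rules": {"covers": {"cart/tax.py"}, "fail_rate": 0.05},
}
print(prioritize_tests(tests, ["cart/tax.py"]))
# ['test_checkout', 'test_tax_rules', 'test_login']
```

Tests unrelated to the change still run, just later; a pipeline could instead stop after the relevant tests and defer the rest to a nightly run.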

More on Test Case Management

This was a partial excerpt from the chapter “Leveraging AI and ML in Test Management Systems” in Eran Kinsbruner’s book Accelerating Software Quality. Kinsbruner is a testing expert and best-selling author. The book provides examples, recommendations, and best practices that will shape the future of DevOps together with AI/ML.

If you'd like to learn more about test case management and traceability, check out Perforce ALM (formerly Helix ALM). It integrates with Jira, and you can try it for free.