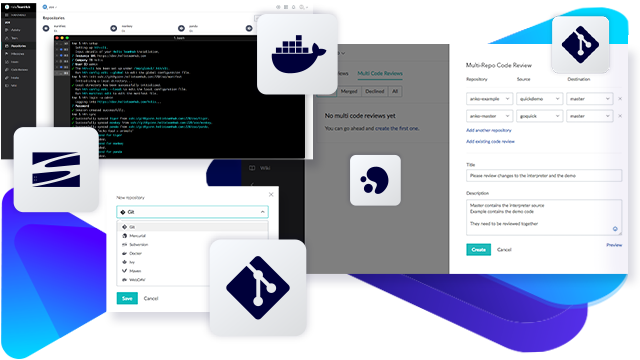

Source Code Repository Hosting

Your code repository software is where you store your source code. This might be a Mercurial, Git, or SVN repository.

Perforce TeamHub (Helix TeamHub) can host your source code repository, whether it’s Mercurial, Git, or SVN.

You can add multiple repositories to one project, or create a separate project for each repository.

Host Container & Artifact Repositories

TeamHub can host more than your code repositories. You can manage and maintain all of your software assets in one spot.

This includes build artifact repositories (Maven, Ivy) and Docker container registries. It also includes WebDAV repositories for privately sharing your other binary files.

Bring Your Repos Together With Perforce Version Control

You can use TeamHub on its own, or pair it with Perforce P4 through P4 Git Connector to maintain a single source of truth across development teams. For example, you can keep large binary files in P4, then combine them with Git assets from TeamHub in a hybrid workspace to achieve high build performance.

How It Works For Git Teams

- Developers use Git, hosted in TeamHub.

- They can commit changes, then collaborate and do code reviews in TeamHub.

- P4 Git Connector combines Git code (from TeamHub) with your graphics, audio, video, 3D, AR/VR, and other assets (from P4) for your preferred build runner.

P4 Git Connector is a high-performance Git server (inside a Perforce server).
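To picture the hybrid workspace idea, here is a minimal conceptual sketch in Python. It is not the P4 Git Connector API or its implementation: the `Source` class, the `hybrid_workspace` function, and the sample paths and identifiers are all hypothetical, chosen only to show files from two systems being merged into one view where each path has a single owner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """A hypothetical stand-in for one version control system."""
    name: str              # illustrative label, e.g. "git" or "p4"
    files: dict[str, str]  # workspace path -> content identifier


def hybrid_workspace(sources: list[Source]) -> dict[str, tuple[str, str]]:
    """Merge files from several sources into one workspace view.

    Each path maps to (owning source, content identifier). A path claimed
    by two sources raises ValueError, so every file has exactly one owner.
    """
    view: dict[str, tuple[str, str]] = {}
    for source in sources:
        for path, content_id in source.files.items():
            if path in view:
                owner = view[path][0]
                raise ValueError(
                    f"{path} is provided by both {owner} and {source.name}"
                )
            view[path] = (source.name, content_id)
    return view


# Illustrative data only: code lives in Git, large binaries live in P4.
git_code = Source("git", {"src/main.cpp": "a1b2c3"})
p4_assets = Source("p4", {"assets/hero.fbx": "rev-12"})

workspace = hybrid_workspace([git_code, p4_assets])
```

A build runner in this toy model would read `workspace` as a single file listing, without needing to know which system each file came from.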

Perforce P4 is the best version control software for world-class development at scale.

Scale TeamHub to Fit Your Needs

TeamHub is available in the cloud or on-premises. Learn more about the flexible plans for teams of all sizes.