Surround SCM: Source Code Management for Perforce ALM

Surround SCM seamlessly integrates with Perforce ALM (formerly Helix ALM) to help you manage code, along with requirements, tests, and issues. You can work with any of these artifacts from either application. Use it to:

- Collaborate on Code
- Configure Workflows
- Automate Workflows
- Keep Artifacts Secure

Enhance Perforce ALM with Intuitive Source Code Management

Key Benefits of Surround SCM

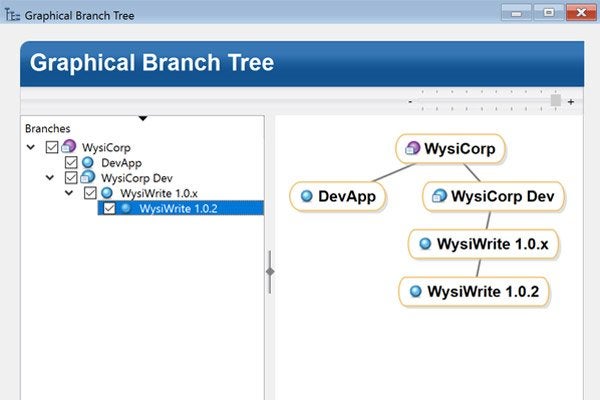

Flexible branching gives you complete control of how you manage releases and track configurations. With a variety of branch types, Surround SCM makes it possible to manage any project without forcing a specific process or methodology on your team.

Smart branches also retain extensive linking history, alleviating manual merge pain and ensuring that automatic code merges are right the first time.

Surround SCM comes with built-in code review. Comments are tracked with the file and can be reviewed for future reference. You can also integrate code review as part of your development workflow. This means you can block files from being checked into your build branch until they've passed the review.

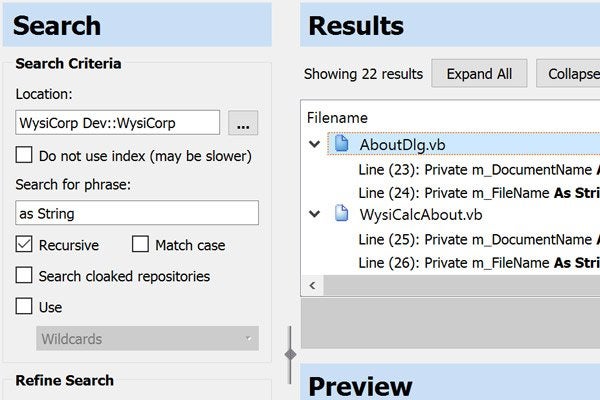

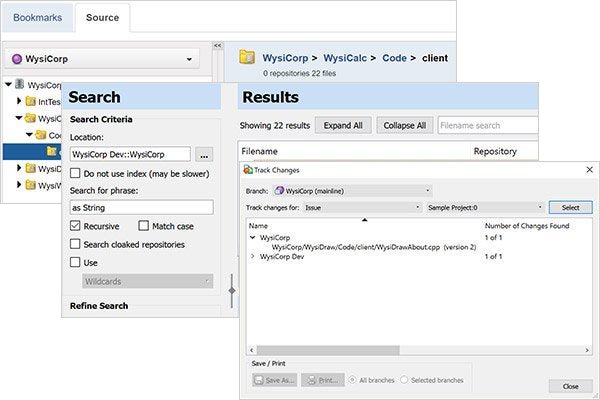

The find-in-file feature makes it fast and easy to find text in text files, as well as Word, Excel, PDF, and even OpenOffice documents. Wildcards and regular expression support ensure you'll find the text you seek.
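As a rough illustration of how wildcard and regular expression searches differ, here is a minimal Python sketch. It is not Surround SCM's implementation or API; the function name `find_in_text` and the sample text are hypothetical, and it only handles plain text.

```python
import fnmatch
import re

def find_in_text(text, pattern, use_regex=False):
    """Return (line_number, line) pairs for lines that match the pattern.

    Hypothetical helper for illustration only; not part of Surround SCM.
    """
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        if use_regex:
            # Regular expression: match anywhere in the line
            matched = re.search(pattern, line) is not None
        else:
            # Wildcard: wrap in '*' so the pattern may appear anywhere
            matched = fnmatch.fnmatchcase(line, f"*{pattern}*")
        if matched:
            hits.append((number, line))
    return hits

sample = "int total = 0;\n// TODO: fix rounding\nreturn total;"
wildcard_hits = find_in_text(sample, "TODO*round")
regex_hits = find_in_text(sample, r"\btotal\b", use_regex=True)
```

The wildcard search treats `*` as "any run of characters", while the regular expression search allows finer control, such as whole-word matching with `\b`.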

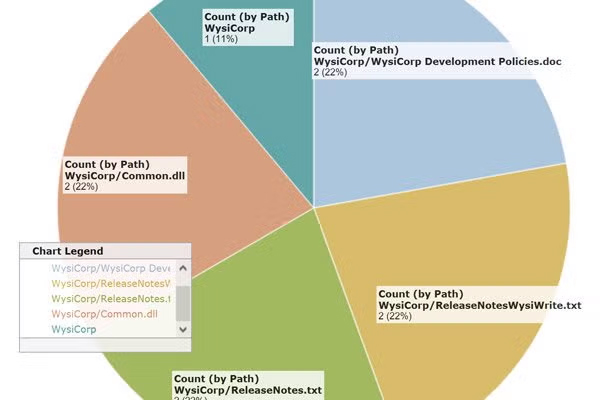

Surround SCM helps you keep management updated. Start with reusable filters to cut through the clutter and find your most valuable data. Then choose from a variety of detail, history, trend, and differences reports. The relational database management system (RDBMS) is also well documented, so you can use other reporting tools if needed.

Get complete control over your product development artifacts, including code. Together, Surround SCM's branching and advanced labeling features are especially powerful, allowing you to efficiently manage your change process and releases as well as track configurations.

The integrated code review allows you to store reviewers' comments so they can be referenced as team members work with files.

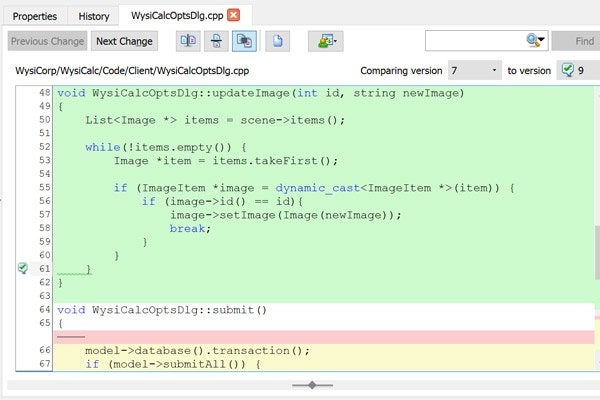

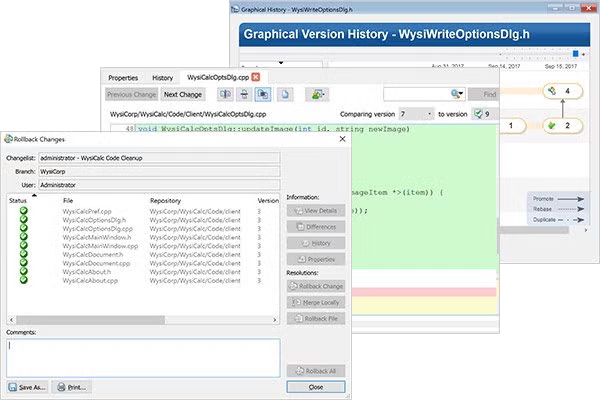

With Surround SCM you can easily see the full set of actions performed against a file across all branches. Interactively trace a file's history, see what changed between any two versions, and gain new insight into changes to your code. It's also a great way to see branch relationships.

Surround SCM's extensive changelist and atomic transaction support lets you perform actions on groups of files and roll back or cancel operations on related files.
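The idea behind an atomic changelist can be sketched in a few lines of Python: either every change in the group is applied, or none is. This is a simplified, in-memory illustration under assumed names (`commit_changelist`, a dictionary standing in for the repository), not how Surround SCM stores or commits files.

```python
def commit_changelist(repo, changes):
    """Apply every change in the changelist, or none of them.

    repo:    dict mapping file path -> content (stand-in for a repository)
    changes: dict mapping file path -> new content; None is treated as invalid
    Hypothetical sketch for illustration only.
    """
    snapshot = dict(repo)  # remember the state before the transaction
    try:
        for path, content in changes.items():
            if content is None:
                raise ValueError(f"no content supplied for {path}")
            repo[path] = content
    except ValueError:
        # Roll back: restore the repository to the pre-transaction state
        repo.clear()
        repo.update(snapshot)
        return False
    return True

repo = {"a.c": "v1", "b.c": "v1"}
failed = commit_changelist(repo, {"a.c": "v2", "b.c": None})
after_failure = dict(repo)
succeeded = commit_changelist(repo, {"a.c": "v2", "b.c": "v2"})
```

In the failing call, `a.c` is updated before the invalid entry for `b.c` is reached, and the rollback restores it so the related files stay consistent.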

Surround SCM keeps you informed of changes with built-in email notifications. And the web client provides access from any browser, for when you need to work from a machine that doesn't have Surround installed.

Surround SCM has a rich set of built-in reports and report creation capabilities for analyzing file information and activity. You can even create custom change management reports that run outside Surround SCM.

When compliance is critical, Surround SCM lets you track electronic signatures and quickly run an audit trail report to review and validate signature records.

Surround SCM can tell you whether a file you are including in the build was code reviewed, help ensure design documents went through the review process, and control who can make changes to reviewed and approved files. Just tell Surround the workflow states your files can exist in.

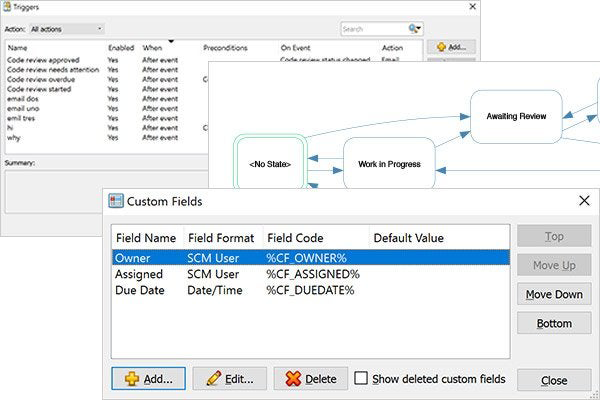

Once you define states, Surround SCM's configurable workflows let you control which actions you can perform on any file or branch based on its state. Don't want to allow files that haven't been code reviewed into the build? It'll help keep that from happening.
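Conceptually, a state-based workflow is a lookup from each state to the actions it permits. The Python sketch below assumes hypothetical state and action names; in Surround SCM you define your own states and rules through the application, not through code like this.

```python
# Hypothetical states and actions, chosen for illustration only
ALLOWED_ACTIONS = {
    "In Development": {"check_in", "request_review"},
    "In Review": {"approve", "reject"},
    "Approved": {"promote_to_build"},
}

def can_perform(state, action):
    """Return True if the action is permitted for a file in the given state."""
    return action in ALLOWED_ACTIONS.get(state, set())

unreviewed_allowed = can_perform("In Development", "promote_to_build")
approved_allowed = can_perform("Approved", "promote_to_build")
```

With these example rules, a file that is still in development cannot be promoted to the build, while an approved file can.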

For a tailored fit to your change management process, Surround SCM's in-application, programmable pre- and post-triggers can automate workflow state transitions, enforce workflow rules, send email notifications, run external applications, modify custom fields, perform data validation, log information, and more. Most importantly, triggers are easy to create within Surround SCM.
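The difference between pre- and post-triggers can be sketched as follows: a pre-trigger runs before an action and may block it, while a post-trigger runs after the action completes. All names here (`run_with_triggers`, `require_comment`, `record_event`) are hypothetical and only illustrate the pattern; Surround SCM triggers are created within the application.

```python
def run_with_triggers(event, action, pre_triggers=(), post_triggers=()):
    """Run pre-triggers, then the action, then post-triggers.

    Each pre-trigger returns (ok, reason); the first failure blocks the action.
    Hypothetical sketch for illustration only.
    """
    for trigger in pre_triggers:
        ok, reason = trigger(event)
        if not ok:
            return f"blocked: {reason}"
    action(event)
    for trigger in post_triggers:
        trigger(event)
    return "done"

def require_comment(event):
    """Pre-trigger: data validation that requires a non-empty comment."""
    if event.get("comment", "").strip():
        return True, ""
    return False, "check-in comment is required"

log = []

def record_event(event):
    """Post-trigger: log what happened after the action succeeds."""
    log.append(f"{event['action']} {event['file']}")

def check_in(event):
    """Stand-in for the real action; does nothing in this sketch."""

blocked = run_with_triggers(
    {"action": "check_in", "file": "main.c", "comment": ""},
    check_in, [require_comment], [record_event],
)
log_after_block = list(log)
allowed = run_with_triggers(
    {"action": "check_in", "file": "main.c", "comment": "Fix rounding"},
    check_in, [require_comment], [record_event],
)
```

The same pattern extends to the other uses listed above, such as sending a notification or changing a workflow state in a post-trigger.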

Talk to an Expert

Have questions about Surround SCM, or want to add it to Perforce ALM? Get in touch with us today.