What's New in Perforce ALM 2025.2

Helix ALM is now Perforce ALM! Learn more about the name change.

Perforce ALM 2025.2 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Organize filters and reports using tags

Administrators can now create tags for grouping related filters or reports, such as those for a specific product or release. If your team has a large number of filters or reports, use tags to organize them and help team members find them more easily in various areas of ALM.

Tags have a unique name, optional description, text color, background color, and icon to help users identify them at a glance.

To add or remove tags on several filters or reports at once, select them and bulk change tags.

Add filters or reports as favorites for quick access

Add specific filters or reports as favorites to find them more easily. If you no longer want a filter or report to be a favorite, you can remove it from your favorites.

Use new filtering options to find relevant items in the Filters and Reports lists

New filtering options in the Display Options pane make it easier for users to find relevant items in the Filters and Reports lists. Limit the displayed filters or reports based on the allowed access, whether they are favorites, or by selected tags.

View and manage filters using the Filters list in the web client

You can now view all filters in a project in the Filters list in the web client. To access filters, click Filters in the navigation bar.

From the Filters list, you can perform the following actions on filters: view, add, edit, duplicate, add favorites, remove favorites, bulk change tags, export to Microsoft Excel, and export to text.

Learn more about these features in our blog: The Latest ALM Solutions: Filtering and Tagging.

What's New in Perforce ALM 2025.1

Perforce ALM 2025.1 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Improved requirement document Specification Document window (desktop client)

The requirement document Specification Document window has many usability and performance improvements, and is now more consistent with the web client experience:

- View and edit requirement review workflow events in a document without switching to Review Mode, which no longer exists. You can hide review events in the Show notes list.

- Select requirement text without editing the requirement.

- Click an inline image to open a preview.

- Press and hold CTRL and scroll the mouse wheel to zoom in and out of the requirement details pane.

- Multi-line text field width is no longer fixed and spans the width of the single-line columns or page, depending on which is larger.

- Inline images that are too wide are scaled down instead of cut off.

Add test cases to folders from the Automation Suites list (web client)

You can now add test cases to folders when editing and viewing automation suites and automation builds.

Include file attachment names in matrix reports (desktop and web clients)

You can now include item file attachment names in matrix reports. Use the %ATTACHMENT_FILENAMES% field code.

Other enhancements (desktop client)

- Scaling in the View Image dialog box is now more accurate and does not resize when navigating between multiple images.

- Inline images are now cached so they do not require downloading when viewing again.

- The Qt framework used by the Helix ALM desktop client has moved from WebKit to WebEngine, which is based on Chromium browser technology. The new rendering engine is better tuned for multi-processing, has a smaller memory footprint, and has a larger development community, which improves support for HTML5 and security-related updates.

What's New in Helix ALM 2024.3

Helix ALM 2024.3 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Addressed security vulnerabilities

Perforce consistently upgrades frameworks and modules used to build our software to address security vulnerabilities.

What's New in Helix ALM 2024.2

Helix ALM 2024.2 includes the following key features and enhancements. For a list of all new features, enhancements, bug fixes, and other details, review the release notes.

Configure Field Layouts to Organize Fields in Groups

Helix ALM administrators and other high-level users can now configure field layouts to organize fields in groups to improve the user experience in Add, Edit, and View Item windows/pages.

Each item type has a default layout you can use, or you can configure different layouts for specific security groups and requirement types.

To help users understand the purpose of a group of fields, you can provide a description of a group in a tooltip. To identify any problems with a field layout, such as missing required fields or fields included in the layout that are hidden by field security, you can evaluate the layout to generate a report to help with troubleshooting.

When upgrading the Helix ALM desktop client from an earlier version, fields that were previously displayed in the main area are now in the General group and custom fields are now in the Custom Fields group.

To learn more, see Configuring field layouts.

Enhancements for Users with Requirements Management Reviewer Licenses

In the Helix ALM web client, users logged in with a Requirements Management Reviewer license and a Test Case Management license can now generate test cases while reviewing a requirement.

Users logged in with a Requirements Management Reviewer license and an Issue Management license can now create an issue while reviewing a requirement.

What's New in Helix ALM 2024.1

Helix ALM 2024.1 includes the following key features and enhancements. For a list of all new features, enhancements, bug fixes, and other details, review the release notes.

Document Test Coverage Panel

You can now see the current state of testing using the new document test coverage panel available in requirement documents and requirement document custom reports.

Other Enhancements

What's New in Helix ALM 2023.4

Helix ALM 2023.4 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Open Requirements in Microsoft Excel

Helix ALM now allows you to open requirements in Microsoft Excel directly from requirement documents.

Prevent Importing Automated Replies and Mail List Generated Emails

Helix ALM now filters out automated replies and mail list generated emails so they are not imported into issues.

What's New in Helix ALM 2023.3

Helix ALM 2023.3 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Webhooks

Helix ALM now supports webhooks. Configure webhook recipients and webhook automation rules to send a webhook when an issue, test case, test run, requirement, or requirement document is changed in Helix ALM.
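
As a rough illustration of the receiving side, the following is a minimal sketch of a webhook recipient built only on the Python standard library. The payload format is not described here, so the sketch assumes a JSON body, and the keys `itemType` and `itemNumber` are hypothetical placeholders rather than documented field names. The port and the `run` helper are likewise illustrative.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_event(raw_body: bytes) -> str:
    """Return a one-line summary of a webhook body.

    Assumes a JSON object. 'itemType' and 'itemNumber' are hypothetical
    keys used for illustration, not documented Helix ALM fields.
    """
    try:
        payload = json.loads(raw_body.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return "unparseable payload"
    if not isinstance(payload, dict):
        return "unexpected payload shape"
    item_type = payload.get("itemType", "unknown item type")
    item_number = payload.get("itemNumber", "?")
    return f"{item_type} {item_number} changed"


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes the sender declared.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        print(summarize_event(body))
        # Acknowledge receipt without a response body.
        self.send_response(204)
        self.end_headers()


def run(port: int = 8080) -> None:
    """Serve until interrupted. The port is an arbitrary example value."""
    HTTPServer(("127.0.0.1", port), WebhookHandler).serve_forever()
```

Calling `run()` starts the listener locally. A real recipient would also need to be reachable from the Helix ALM server and should validate incoming requests according to whatever the webhook recipient configuration supports.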

Purge Server Logs Automatically

Configure Helix ALM to automatically purge server log entries from the Admin client.

Enhanced Image Viewing

Use the new View Images dialog to scroll through attached images.

Enhanced Control Over Automation Suite Builds

Helix ALM has added new command securities to give users greater control over automation suite builds:

What’s New in Helix ALM 2023.2

Helix ALM 2023.2 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

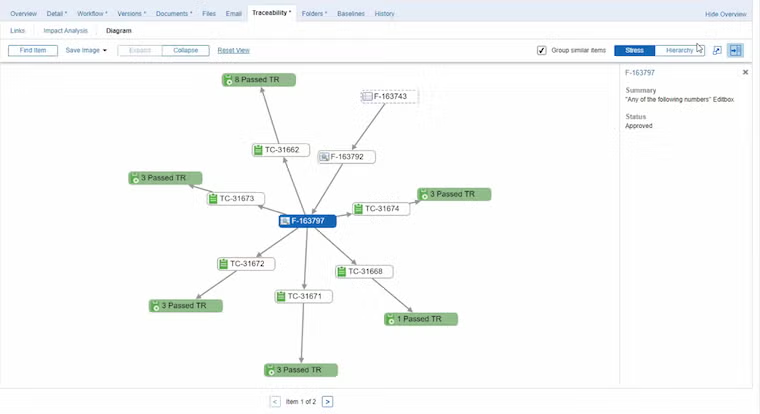

New Feature: Visual Traceability

Helix ALM 2023.2 introduces Visual Traceability, an interactive, dynamic traceability diagram that helps you visualize the relationships and dependencies between all the requirements, test results, issues, and other items in your workflow.

Because it allows you to easily view all artifacts related to any given requirement, this new traceability feature also helps you better understand the full impact of any change to a requirement before you make it. (Web)

Enhanced Requirements Management

Helix ALM has enhanced its requirements management capabilities:

- Requirement document field codes can now be used in snapshot labels.

- Classic MS Word and Excel export timeout values can now be configured as a local option.

Enhanced Searching

Searching on item numbers has been added to the list window find toolbar.

Other Enhancements

Creating an issue using the SOAP SDK now selects the active user when two customer records have the same name.

What’s New in Helix ALM 2023.1

Helix ALM 2023.1 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Automated Test Results can now appear in the Matrix Report

You can now add automated and manual test results related to test cases (or linked to any other item type) to Matrix Reports.

Enhanced linking

Helix ALM has enhanced its linking capabilities.

- Links to requirements within a specification document view now scroll to the requirement in the document and select it.

- HTTP links to Helix ALM items in the Helix ALM desktop client now open the item in the desktop client if the item is in the same Helix ALM project.

Image scaling

In the desktop client, you can now specify whether the View Image dialog scales the image to fit within the window or displays it at 100%.

Enhanced stamp formats

You can now use the new field code, %TMLT%, to add your local time to the project options stamp format.

Other enhancements

- Workflow event fields can now be specified as parents in field relationships to other fields in the same event.

- Download Excel File for reports now uses the report name instead of "matrix-report" for the downloaded Excel file.

What’s New in Helix ALM 2022.3

Helix ALM 2022.3 includes the following key features and enhancements. For a list of all new features, enhancements, and bug fixes, review the release notes.

Deletion of builds and automated test results

To handle the scale of automated testing and keep performance optimized, Helix ALM now supports deleting builds and automated test results.

Protect builds and associated automated test results from deletion

You can protect a build to ensure the build and associated automated test results are not deleted from Helix ALM. Protected builds cannot be deleted manually by users or automatically by Helix ALM if automatic deletion is enabled for the project.

Automatically and manually delete builds and associated automated test results

If you integrate Helix ALM with Jenkins for automated testing, the number of builds and associated automated test results can quickly accumulate in a project. To remove old build data, you can manually delete unprotected builds and associated results or configure Helix ALM to delete the data automatically.

To delete builds and associated results automatically, an administrator or other high-level user must enable automatic deletion and set the number of days before the data is deleted from Helix ALM in the Automated Testing project options using the desktop client. Builds that are protected or have test results linked to other Helix ALM items are not deleted.
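
The eligibility rules described above can be sketched as a small model. This is only an illustration of the stated conditions, not how Helix ALM implements them: the `Build` class and its field names are hypothetical, and measuring age from the build's creation time with a strict "older than" comparison is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Build:
    """Hypothetical stand-in for a build record."""
    name: str
    created: datetime
    protected: bool = False
    # True when any test result in the build is linked to another item.
    has_linked_results: bool = False


def eligible_for_auto_delete(build: Build, retention_days: int, now: datetime) -> bool:
    """Apply the rules from the text: protected builds and builds with
    linked test results are never deleted automatically; other builds are
    eligible once they are older than the configured number of days.
    """
    if build.protected or build.has_linked_results:
        return False
    # Assumption: age is counted from creation and the boundary is exclusive.
    return now - build.created > timedelta(days=retention_days)


now = datetime(2022, 12, 1)
builds = [
    Build("nightly-001", datetime(2022, 1, 1)),
    Build("release-1.0", datetime(2022, 1, 1), protected=True),
    Build("nightly-200", datetime(2022, 11, 20)),
]
to_delete = [b.name for b in builds if eligible_for_auto_delete(b, 30, now)]
print(to_delete)
```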

Automatic and manual deletions are tracked in the audit trail if detailed audit trail logging is enabled for the project.

Other enhancements

- Configure endpoints to support National Clouds when using the Exchange Online protocol for Microsoft Office 365.

- Improved performance when searching for items by phrase, all words, and any words.

What’s New in Helix ALM 2022.2

Helix ALM 2022.2 includes the following key features. For a complete list of features and enhancements, check out the release notes.

Out-of-the-box automated testing support

Test automation is an integral part of any development lifecycle across all types of products and industries. Helix ALM now provides more powerful, integrated automated testing support, giving you complete visibility and continuous traceability. You can quickly understand how automated test results impact test cases and see complete traceability between requirements, test cases, test results, and any related issues.

Using Helix ALM to manage your automated testing process can also help reduce or eliminate manual effort required to link test results back to test cases.

Note: Some automated testing features, such as working with automation suites and viewing test results, are only available in the web client.

Create test automation suites to group specific tests

Create automation suites to group test cases. You can organize suites by functional area, specific testing type, or any logical way that supports your testing needs. Creating smaller, focused automation suites helps you quickly view results for a specific testing area and identify failures so you can investigate the root cause. You can also see areas that are not yet tested and identify if additional tests need to be created for any test cases in the suite.

As you continue to build your test case library, you can add (append) new test cases to existing suites to ensure coverage.

Automatically submit automated test results back to Helix ALM from Jenkins or other automation tools

Helix ALM builds a bridge to your continuous integration/continuous delivery (CI/CD) pipeline. Regardless of your automated testing tool, you can automatically send test results back to Helix ALM and associate the results with test cases for complete traceability.

If you use Jenkins, use the Helix ALM Test Case Management plugin to automatically submit test results to Helix ALM. Freestyle projects and Pipeline are supported using the JUnit or xUnit XML report format.

You can also run Jenkins projects directly from automation suites in Helix ALM without switching to Jenkins. This is helpful if you need to rerun a specific test quickly.

If you use another automated testing tool, use the Helix ALM REST API to submit test results to Helix ALM.

You can use these methods in parallel if you use both Jenkins and other tools for testing.
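
To give a sense of what a JUnit XML report carries, here is a minimal sketch that counts outcomes in one using the Python standard library. It does not submit anything to Helix ALM and does not reflect how the Jenkins plugin or REST API process results. The sample report and the `summarize_junit` helper are illustrative, and grouping `error` with `failure` is a simplifying assumption.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Illustrative report in the common JUnit XML layout.
SAMPLE = """<testsuite name="login" tests="3">
  <testcase classname="auth" name="valid_login"/>
  <testcase classname="auth" name="bad_password">
    <failure message="expected 401"/>
  </testcase>
  <testcase classname="auth" name="sso">
    <skipped/>
  </testcase>
</testsuite>"""


def summarize_junit(xml_text: str) -> Counter:
    """Count passed, failed, and skipped test cases in a JUnit XML report."""
    root = ET.fromstring(xml_text)
    counts = Counter()
    # iter() also finds test cases nested under a <testsuites> wrapper.
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            counts["failed"] += 1
        elif case.find("skipped") is not None:
            counts["skipped"] += 1
        else:
            counts["passed"] += 1
    return counts


print(dict(summarize_junit(SAMPLE)))
```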

View automated test result status

View summarized and detailed test result status for each automation suite to identify passed, failed, blocked, unknown, or skipped tests. View results by test case, build, or individual automated test. View status information for the latest build of an automation suite from Helix ALM.

Generate new test cases from automated test results

If a test result does not apply to an existing test case, you can generate a new test case from the result. When the test runs again, the results are automatically associated with the new test case.

Add related issues from failed automated tests

If an automated test fails, you can add a related issue from the test result to report the failure so it can be investigated and fixed. After adding the issue, you can trace back to see the test result it was created from, the test case, and the requirement, if linked.

Other enhancements

- Set margins when saving a report as a PDF file.

- In Helix ALM Web, set an option to always expand or collapse all requirements in the tree when opening a requirement document.

What’s New in Helix ALM 2022.1

Helix ALM 2022.1 includes the following key features and enhancements.

Reply to All Email Recipients

When you reply to email from within Helix ALM, you can now click Reply All to reply to the sender and all recipients in the To and Cc fields.

Options for Downloading and Saving Reports

You now have more options for downloading and saving reports.

- Download time tracking reports as Microsoft Excel files.

- Optionally include text and background colors when downloading matrix reports as Microsoft Excel files.

- Optionally include page numbers when saving reports as PDF files.

License Usage Enhancements

Managing license usage is now easier on the Helix ALM License Server.

- Floating licenses are now reserved for a specific user for 30 minutes. When a user logs in, a floating license is reserved for that user for 30 minutes, even if they log out during that period. If they log in again before the 30 minutes elapse, they can still use the floating license.

- License server administrators can now view the remaining time for reserved floating licenses and the last activity date/time for a user in the license server admin utility. Admins can also view the number of all available, used, and reserved licenses in the support file generated from the admin utility.
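
The 30-minute floating license reservation described above can be modeled roughly as follows. This is a sketch of the stated behavior only, not the license server's implementation. The `FloatingPool` class is hypothetical, and it assumes that the reservation starts at the login that acquires the license and is not extended by a later login within the window.

```python
from datetime import datetime, timedelta

RESERVATION = timedelta(minutes=30)


class FloatingPool:
    """Hypothetical model of a pool of floating licenses."""

    def __init__(self, size: int):
        self.size = size
        self.active = set()        # users currently logged in
        self.reserved_until = {}   # user -> time the reservation ends

    def _held(self, now: datetime) -> set:
        # A license is held by logged-in users and by unexpired reservations.
        reserved = {u for u, t in self.reserved_until.items() if t > now}
        return self.active | reserved

    def login(self, user: str, now: datetime) -> bool:
        held = self._held(now)
        if user not in held:
            if len(held) >= self.size:
                return False  # no free license
            # Assumption: the reservation starts when the license is acquired.
            self.reserved_until[user] = now + RESERVATION
        self.active.add(user)
        return True

    def logout(self, user: str) -> None:
        # The reservation, if still running, outlives the session.
        self.active.discard(user)


pool = FloatingPool(size=1)
start = datetime(2022, 1, 1, 9, 0)
pool.login("ana", start)
pool.logout("ana")
# Ten minutes later the only license is still reserved for ana.
print(pool.login("ben", start + timedelta(minutes=10)))
```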