What's New In P4?

Helix Core Is Now P4

You might have noticed that Perforce has a new look and a new logo that reflects our place in DevOps workflows. As part of these changes, Helix Core is now P4. While the product interface retains the Helix Core branding in this release, name updates and new icons to align with the P4 branding will be rolled out in a future update. To learn more, read the Re-Introducing P4 blog.

What's New Information Now in Documentation

We've consolidated our product release information into our product documentation. This change streamlines how you access important release information and ensures you can find all relevant updates more easily.

Where to Find Release Information

Going forward, refer to our documentation to learn what's new.

What's New in Helix Core 2024.2

Download PDF Full Release Details

“Our team is excited to bring you Helix Core 2024.2, filled with features designed to improve your team’s efficiency and enhance your workflows. With our new OpenTelemetry Protocol Integration, teams can now easily integrate their structured logs into observability platforms. Delta Transfers are also now available for syncs—extending upon the delta transfer for submits capability we released in 2024.1— leading to less data being transferred and shorter wait times.

Lastly, we just launched a new command, “p4 diagnostics” in Tech Preview to retrieve information about your server to assist in advanced troubleshooting. Please give it a try and send our team your feedback at [email protected] as we continue to refine the functionality.”

Senior Director of Product Management, Brent Schiestl

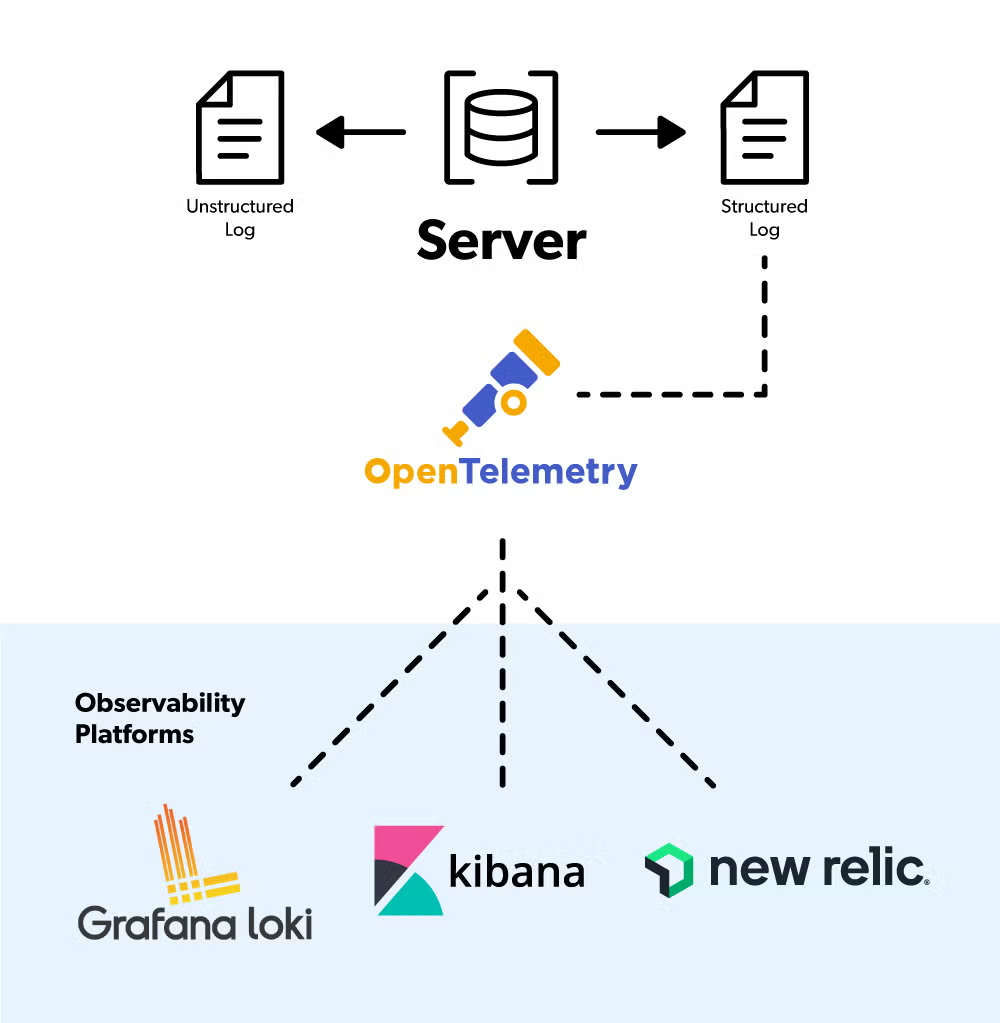

OpenTelemetry Protocol (OTLP) Integration for Structured Logs

The industry-standard protocol for integrating with observability tools is called the Open Telemetry Protocol (OTLP). With this enhancement, Helix Core structured logs now integrate seamlessly with OTLP, giving customers a convenient way to move these structured logs into observability platforms (i.e., New Relic, Grafana Loki, Kibana, etc.), where these logs can be locked down from being modified.

With this feature, message bodies are now exported via OTLP in JSON format via our new “p4 logexport” command, which makes them which makes them easier to use with other tools that need to process the information with the tradeoff of generating larger logs.

This functionality is only available on Helix Core servers running on the Linux x86_64 platform.

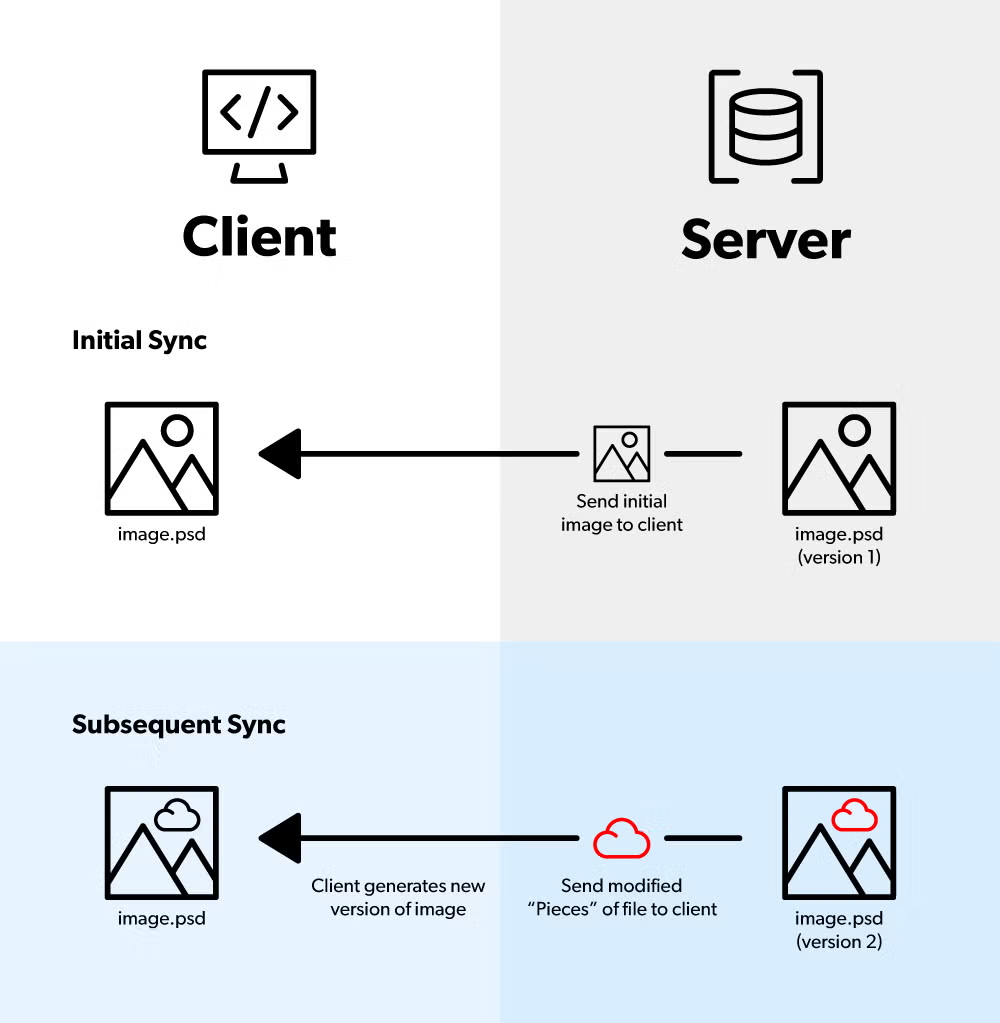

Delta Syncs of File Revisions

Previously, when an uncompressed binary file was synced down to a client, where the client already has a prior revision of that file, the entire file was transferred from the server to the client. With this update, now only the delta is transferred.

The user will experience the best performance gains when syncing down subsequent revisions that contain small edits to large files when there is latency between the client and server.

It is important to note that to start using Delta Syncs on P4 and P4V, users will need to update to P4 2024.2 and P4V 2024.4. To learn more, see the updated net.delta.transfer.minsize and net.delta.transfer.threshold configurables in the Helix Core Command-Line (P4) Reference.

Hot File Support for P4VFS

P4VFS is designed to only sync metadata on the initial sync request, speeding up the initial sync action by deferring the full download to future events that require it (ex: opening a file for edit). With this release, customers are now able to define rules for which files to always sync, via a new “hot files” specification. A “hot file” is a file that is never virtually synced.

The specification is created with the new “p4 hotfiles” command and holds rules which can be based on any combination of depot path (required), file extension (optional), and file size (optional). Rules can be defined on both the server and the client. Client-side rules will be combined with server-side rules for evaluation.

We have also removed the P4VFS client from “tech preview” status which means the client is now fully supported. It is important to note that the P4VFS client is only supported on the Windows operating system. For more details on how to use this feature, please refer to the Helix Core Virtual File Server (P4VFS) user guide.

Tech Preview: New “p4 diagnostics” Command for Advanced Troubleshooting

A new command, “p4 diagnostics”, now exists to seamlessly collect server configuration and diagnostic information for advanced troubleshooting. When run, the command will capture server configuration details as well as monitor, topology, current journal file, log file, and database lock information. With this enhancement, customers will be able to provide relevant and timely data to Perforce customer support for advanced server troubleshooting.

Previously, external scripts such as p4dstate.sh were required to be installed and running to collect this information. This new command does not require root access to the server and can be run by an operator user type or a user with admin privileges.

“p4 diagnostics” is only supported on Linux platforms and is being released in a tech preview status. Please provide feedback to [email protected] as our team continues to refine it.

Updates

- Several important CVEs addressed. See release notes for more details.

- Sparse streams functionality has been enhanced to now allow unshelving from any stream into a sparse stream.

- Labels can now be ignored when using the “p4 obliterate” command.

- Multiple filtering enhancements have been added, including:

- Changelist filtering:

- Can now be filtered by the use of * wildcards in expressions enclosed in quotes when searching based on client workspace name.

- Can now be filtered using more than one user value.

- Can now be filtered using more than one client workspace value.

- Client workspace filtering:

- Can now be filtered wildcards in expressions enclosed in quotes when searching based on the owner.

- Changelist filtering:

For more details on these updates, please refer to the Helix Core Command-Line (P4) Reference.

Other Tech Preview Promotions

- The following features have also been promoted from Technology Preview:

- ‘p4 topology’: introduced in Helix Core 2021.2, this command enables super and operator users to list all Helix Core servers that are directly and indirectly connected to the server on which the command is run.

- Helix Core Server System Resource Monitoring: introduced in Helix Core 2023.1, with this feature, admins can rely on built-in mechanisms to monitor CPU and memory utilization across their server and proactively pause or even terminate Helix Core operations to prevent a server crash or outage.

In Case You Missed It

- In Helix Core 2024.1, we introduced lightweight branching via Sparse Streams, enabling teams to instantaneously create new branches.

- In Helix Core 2023.2, users gained the ability to connect Helix Core archive depots to S3-backed (or S3-compatible) cloud object storage—a more cost-effective and scalable storage solution. In Helix Core 2024.1, this support was extended to local and streams depots.

What’s New in Helix Core 2024.1

Download PDFFull Release Details

Lightweight Branching via Sparse Streams

Instantaneously Create New Branches

Sparse Streams is a highly anticipated new feature that gives users a way to instantaneously create a stream (branch), accelerating your team’s workflow.

With this new lightweight branching option, files are only branched as needed and new streams aren’t populated with all the metadata from the files in the parent stream. This significantly reduces the amount of data stored per branch and saves time and resources. Sparse Streams also allows teams to implement common branching methodologies, such as "branch per bug” and “branch per feature”.

Watch this on-demand webinar for a walkthrough and demo of Sparse Streams or read our Helix Core Command-Line (P4) Guide for information on how to get started.

Delta Submits of File Edits

Improve Efficiency by Only Submitting the Changes

Traditionally, when an uncompressed binary file is edited and submitted to the depot, the entire file is transferred from the client to the server. With this new feature, now only the delta is submitted.

The user will experience the best performance gains when working on small edits to large files when there is latency between the client and server. The functionality can be controlled (and even switched off) by the new net.delta.transfer.minsize configurable, which can be set on both the client and the server.

It is important to note that this increased performance only applies to edits of files that have previously been added to the depot and it will not impact the initial add process. It does, however, apply to edits of files that were originally added to the depot prior to upgrading to this version of Helix Core.

Backup-Eligible Partitioned Clients

Reduce Slowdowns and Utilize Partitioned Clients for Daily Work

With this enhancement, certain partitioned clients are now eligible for back up (checkpointing and journaling) as well as recovery.

The user will see a new type of client called “partitioned-jnl”. The “jnl” stands for journal, which is part of the backup mechanism within Helix Core. When the user creates a new client, they can choose this new client type. The advantage of using this type of client is that it can reduce central contention on the server since each partitioned client gets its own unique database table on the server to track which files the client currently has.

Increased S3 (and S3-Compatible) Object Storage Support

Gain a cost-effective and scalable storage solution by storing depots to S3-backed (or S3-compatible) cloud object storage.

S3 Object Storage Support is now supported for the most popular depot types. In our last Helix Core release (2023.2), this support was limited to only archive depots.

This update enables users to store local and stream-based depots onto S3 storage and potentially save up to 90% in costs compared to the price of block storage.

Linux ARM 64-Bit Support

Enhanced Platform Support

The Helix Core Server (P4D), Helix Core Command-Line Client (P4), Helix Proxy (P4P), and Helix Broker (P4Broker) can now be run on Linux ARM 64-bit hardware.

To get started, follow the same process for downloading and installing new binaries.

Other Notable Enhancements

- Commit servers are now able to verify and fetch missing/corrupted archives from edge servers.

- Comment support has been added to the client, label, branch, and typemap specs. In addition, comments can now be required when changing server. configurable values via a new dm.configure.comment.mandatory configurable.

- A new configurable, db.journalrotate.warnthresh, exists to warn on login if a journal rotate hasn’t been initiated in X days.

- A given client and all of its shelves can now be deleted via a single command, p4 client -df -Fd <client>.

What’s New in Helix Core 2023.2

DOWNLOAD PDF FULL RELEASE DETAILS

S3 (and S3-Compatible) Object Storage Support

Gain a cost-effective and scalable storage solution by storing archive depots to S3-backed (or S3-compatible) cloud object storage.

Users now have the ability to connect Helix Core archive depots to S3-backed (or S3-compatible) cloud object storage. S3 object storage support offers users a durable and convenient solution that grows automatically and indefinitely.

Archive depots are well suited for storing assets that require less access (i.e., past seasons of a show or older video game releases which don’t require frequent updates).

To start storing archive depots in S3, users will specify the following while creating a depot:

- The location of the S3 bucket.

- The credentials needed to access the bucket.

See the Helix Core Server Administrator Guide for more on archive depots.

Configurables Improvements

Easily sort through the available configuration options and understand system recommendations for optimal performance.

Configurables give administrators the ability to customize a Helix Core service. In this release, we have added more specific in-system descriptions to note which configurables require a server restart, as well as provide a range of valid values with included recommendations (where applicable). Additionally, admins now have the ability to comment alongside value changes for better traceability and documentation.

Users will enter the new command “p4 configure help” to take advantage of this enhancement.

Proxy Cache Self-Cleaning

Efficiently manage available disk space and reduce the manual maintenance needed for your proxy servers.

Proxy servers have often required manual and extensive maintenance that only increases as the number of servers grows. With proxy cache self-cleaning, admins can automatically purge any files that haven’t been accessed in a specified number of days.

This feature reduces the manual workload required in proxy server maintenance, enables users to efficiently manage proxy server disk space, and eliminates the potential human error of purging files that shouldn’t be removed.

Notable Enhancements

Structured Logging Update:

We will now log the number of files sent and received by the server, proxy, or client – as well as the total size of files sent and received. This update will enable users to troubleshoot performance issues more efficiently. Refer to the release notes for more information on the limitations of these enhancements (including proxies, forwarding replicas, and brokers).

License Management Optimizations

We’ve made license changes better, by now allowing users query their Helix Core server for a list of valid IP and MAC addresses prior to making a license change.

Users can also validate the IP/MAC address on the physical license file before proceeding with a license change. In addition, we’ve minimized the number of instances where a server restart is required when making a license change to the server for added convenience.

What’s New in Helix Core 2023.1

Download PDF Full Release Details

Virtual File Sync

Lessen sync wait times and save space with Virtual File Sync.

Virtual File Sync is a highly anticipated feature that provides users with the ability to sync only the file metadata on the initial request, and later choose to download the full file content when needed.

Virtual File Sync will accelerate sync times for teams working with large file sizes and reduce the amount of data transferred from the server to the user’s local machine. This feature will add speed and cost efficiency to use cases that do not require full downloads of large assets, such as shaders, textures, and 3D models.

To realize the benefits of Virtual File Sync, an admin first needs to upgrade their Helix Core Server to version 2023.1. Then each Helix Core user will need to install and run the Helix Core Virtual File Service (P4VFS)* client agent on their individual Windows workstations. Clients that support P4VFS (i.e., P4V 2023.2, P4D 2023.1) can then be used to create a new workspace with the new “altsync” property enabled.

(*P4VFS is currently available as a Tech Preview exclusive to Windows clients.)

Helix Core Server System Resource Monitoring

Proactively pause and terminate operations when limits are exceeded.

No team wants to experience downtime. With Helix Core Server System Resource Monitoring, admins can rely on built-in mechanisms to monitor CPU and memory utilization* across their server and proactively pause or even terminate Helix Core operations to prevent a server crash or outage. Helix Core Server System Resource Monitoring is designed to prevent large spikes of resource usage by helping to spread the resource load over larger periods of time.

(*System Resource Monitoring is currently available as a Tech Preview feature and some capabilities are operating system dependent.)

Multi-File Checkpointing

Generate multiple checkpoint files for a given database table.

Multi-File Checkpointing enables users to generate multiple checkpoint files for a given database table, as well as apply checkpoint restore and dump operations on multiple checkpoint files. With Multi-File Checkpointing, your overall checkpoint duration is no longer gated by the size of your largest database table. Continue following Helix Core best practices for backing up metadata with more efficiency than ever before!

Content Distribution Server

Easily and securely share source code with partners and collaborators.

The new Content Distribution Server adds a data exchange layer between your primary server and any third-party partners. Admins can set up and manage a set of read-only access users independent from the primary server with this new server type. There is also now a way to maintain 1:1 content mapping and ensure traceability with third-parities (which can be used with or without this new server type) — making it easier to securely share source code with partners and collaborators. Contact us to get a license and get started today.

What's New in Helix Core 2022.2?

Download PDFFull Release Details

Support for Writable Streams Components

Ultimate flexibility in defining Component and Consuming Stream relationships.

In 2022.1, Streams Components were introduced. This feature allows users to do component-based development using Helix Core Streams without the error-prone and manual effort of keeping path definitions in sync.

In this release, we have added support for Streams Components to be writeable in addition to read-only, allowing users to submit changes to the Component Stream directly from a Consuming Stream. The two new writable Component Types, “writeall” and “writeimport+”, give teams granular control in defining which files a Consuming Stream can submit for change to a Component Stream.

Rename Client Workspaces

Workspace names can now be edited after initial creation.

Projects evolve, hardware changes, and the naming convention you used for your client workspace at initial inception may no longer fit your needs. With this release, those less-than-ideal client workspace names can be adjusted.

By default, superusers and admins can rename any client workspace (regardless of ownership), and individual users can only rename workspaces they own. This setting is configurable by the Perforce admin.

Max Memory Limitations for User Groups

Reduce the risk of server performance issues by temporarily rejecting commands when a memory limit has been exceeded.

Admins can apply memory limits on specific user groups to help prevent a server from running out of available memory. This feature runs a check on every operation a user in a group with memory limits invokes.

If the predefined memory threshold is exceeded at the time an operation is submitted, the operation will fail without delay, which, after consuming time and resources, is preferable to failing. In addition, this works nicely for automated and novice user groups who can sometimes be unpredictable.

Parallel Checkpointing

Significantly speed up the process of taking server checkpoints.

As databases grow, so does the amount of time it takes for checkpoints to complete. Admins can now define how many “threads” the checkpoint operation can use, spreading out the workload across all database tables being checkpointed, and reducing the time to complete the operation. The most significant time savings will be for those with database tables of approximate comparable size because a given database table can only have one thread allocated to it. This new parallel functionality also applies to the checkpoint restore and dump operations.

What's New in Helix Core 2022.1?

DOWNLOAD PDF FULL RELEASE DETAILS

Real-time Debugging

Now you can easily inspect the root causes of server issues as the issues are actively occurring.

Admins can now “turn up” the monitor level of their server and the change will monitor all running processes. When the root cause of the problem has been resolved, admins “turn down” the monitor level. Customers can pair this enhancement with real-time monitoring (released in Helix Core 2021.1) where real-time monitoring can raise the alarm bells and real-time debugging can help efficiently identify the source of the problem.

To do this, set a new server configurable, rt.monitorfile, from the command line interface. Once this configurable is set, any changes to the existing server configurable, monitor, that result in a value of 10+ (10 or 25), will unlock the full potential of the enhancement.

Streams Components

Reuse components across projects by isolating the “common component” into its own stream.

No longer do you have to manually keep stream views in sync with one another. Instead, you can now define component relationships between streams and test these relationships in isolation before checking in changes to the stream spec.

You can define other streams as components in the new “Components” section of the stream spec. Defining a component requires you to specify a:

- “Component type”,

- “Component folder”, and

- “Component stream”.

For this initial release, the only valid “component type” is “read-only”. This means that consuming streams cannot submit updates to the component streams via their import+ paths. The “component folder” is the directory prefix for each component view file. The “component stream” is the name of the stream that you are defining as a component. This can be specified as a specific change revision using either @change or @label (automatic label). Component nesting is also supported (ex: stream A defines stream B as a component and stream B defines stream C as a component). Circular component relationships are disallowed, however.

See more on component-based development >>

Failback of Planned Failover

Save time on the process of failback and failover with a new sequence of commands that eliminate reseeding.

Reseeding is no longer a required manual step! A new sequence of commands make it simpler to orchestrate the process of failing over, swapping server roles, and eventually restoring the original server roles.

After failover (using ‘p4 failover’), use the command p4d -Fm, which will reconfigure and get the server ready to be the standby. When you are ready for failback, run the `p4 failback` command on that standby to restore the old server. Then another command, p4d -Fs, will reconfigure the former standby to become the standby again.

Other Notable Enhancements

Changes to the 'p4 print' command: The command now includes 'offset' and 'size' flags to print out partial contents of a file instead of the entire file contents. This enhancement is compatible with both text and binary file types.

Changes to text files: To improve the performance of the network and replication, text files stored as compressed now remain compressed through the transfer phase to the client. The client is now responsible for the decompression. This feature applies to clients 2022.1 and later and does not apply to +k type text files.

Changes to `p4 sync/revert/clean/integ/copy/merge/undo/submit` commands: These now have a '-K' flag that will suppress the expansion of keywords in +k type files.

Improvements to stream deletion handling: Stream deletion is now tied to a changelist, making it possible to find the history of stream deletions as well as view the stream spec of a deleted stream @change. It is also now possible to obliterate a deleted stream’s metadata.

What's New in Helix Core 2021.2?

DOWNLOAD PDF FULL RELEASE DETAILS

Streams Enhancements

It has never been easier to understand how changes to an asset can impact existing streams!

Streams now supports a key traceability feature named “view match”. Simply pass in a depot path — which could represent a folder or a file — and see all streams that are generating a view that includes that depot path. You can optionally filter the results by criteria such as path type (i.e., only show me streams which are importing a specific depot path). This feature helps give you better understanding the ramifications of updating an asset. For example, when you need to fix a bug in a source file and want to understand all streams that may be importing that source file.

Streams now also supports one-to-many mapping functionality (i.e., import&). This means a depot path can map to more than one location in a workspace. For example, you can import two different revisions of the same file into a separate location within a workspace. This can be useful when working with images, where you may want two different revisions of the same image imported into a project. Files imported via one-to-many mapping are read-only.

Global Lock Visibility from Edge Servers

This big change to global locks lets you stop throwing away work and increase your development velocity.

Users connected to edge servers can now understand if another user has an asset globally locked via a different edge (or commit) server, regardless of file type and file type modifiers. This prevents the scenario where two different users are connected to different edge servers but are working on the same non-mergeable file – which can result in the need to throw away one set of changes. Reporting of such locks when users open files can be enabled with a new server configurable `dm.open.show.globallocks` and the p4 fstat command has a new `-OL` flag for requesting the global lock information.

Server Topology Reporting (Tech Preview)

A new p4 command has been added to make it easier to train new Helix Core administrators, help Perforce Support troubleshoot customer issues, and engage with our Perforce Consulting team for topology reviews.

Keeping track of your Helix Core servers can be a challenging task, especially as you deploy new servers when you scale. A new command, p4 topology, can be initiated by super and operator users to list all Helix Core servers that are directly and indirectly connected to the server on which the command is run. This includes standard, commit, replica (including edge), proxy, and broker servers.

Please reach out to us with feedback so we can improve the feature and remove the “tech preview” label from it in a future release.

P4TRUST Updates

Great news for admins: you will have fewer questions from end users about trusting new server fingerprints.

P4TRUST is no longer required for SSL connections where the server provides a certificate that's not self-signed and can be verified by the client. Clients based on the 2021.2 C/C++ P4API (including derived APIs) will now attempt to verify the server's SSL certificate against the local system's CA certificate store. One major benefit of this enhancement is that it will now be much easier to roll certificates or change server IP addresses. For customers with thousands of clients this is a game changer since clients will not be prompted to trust the new fingerprint.

P4TRUST can still be used in conjunction with certificates, which is likely desired when the certificate hasn’t been issued by a Certificate Authority, and P4TRUST can be set based on hostname in this use case.

What's New in Helix Core 2021.1?

DOWNLOAD PDF FULL RELEASE DETAILS

Real-Time Monitoring

Ever wanted an easier way to spot a potential problem prior to hearing about it from your end users? Real-time monitoring is now a possibility within Helix Core!

With added integration with leading tools like Prometheus, you can monitor metrics like the number of commands waiting on a lock, the number of current client connections to a given server, and the number of bytes of journal a replica is behind by without having rely on retroactive log output. Check our blog for more detail on implementing real-time monitoring.

Our Professional Services team can help you install a comprehensive Prometheus/Grafana monitoring for your Helix Core topology with dashboards and alerting based on the new real-time metrics as well as other key metrics as described:

<< https://github.com/perforce/p4prometheus

In addition, for those using Prometheus, a new utility, p4mon-prometheus-exporter, is also now available for your convenience on the Linux x86_64 platform. Look for additional monitoring metrics in future releases based on your feedback.

<< Get p4mon-prometheus-exporter

Streams Enhancements

Files residing within task streams can now be obliterated as desired with the newly added “-T” flag to the existing p4 obliterate command. This is helpful for reclaiming disk space or for cleaning up mistakes made by users who create file hierarchies in the wrong place.

Stream switching across depots is now officially supported as well, with the most common use case being a task stream that lives in a different depot than its parent stream. Look for added P4V support for these stream enhancements soon.

Verify Enhancements

The p4 verify command is used to ensure server archives (depot files) are complete and without corruption. A new --only filter flag, where the actual filter values can be either BAD or MISSING, can be used to return only a subset of results from the verify command. The MISSING filter will avoid checksum calculations during the verification process for added efficiency. These filters can also be used with the -t flag to transfer only those matching files.

You can also now use a -R flag to repair missing files if identical content is found in an existing shelf. Lastly, optimizations have been added to the -z flag to improve memory usage. Gain more trust in the state of your archives in less time!

Integration Engine Enhancements

Multiple integration scenarios have been improved in this release of Helix Core. The first is when a file is moved or renamed and then a second file is added under the original name as the first file. The readded file can now be resolved after all other integrations have been resolved and all resolves are now submitted together.

In addition, a new configurable named dm.resolve.ignoredeleted has been added to change the default behavior of the scenario where a file has been deleted in branch A but not in branch B.

Lastly, it is now possible to move or rename a file where either the target path is a substring of the source path, or vice-versa.

License by MAC Address

With more and more customers deploying at least parts of their Helix Core infrastructure to the cloud, it is now possible to issue licenses tied to a MAC address instead of an IP address. As IP addresses tend to be more fluid in the cloud, a MAC address will alleviate the need to request duplicate licenses over time.

What's New in Helix Core 2020.2?

DOWNLOAD PDF FULL RELEASE DETAILS

Stream Spec Integration

Configuration as code is an inspirational mantra for many of our customers. Just like you’ve been able to integrate file changes across Streams, you can now integrate stream spec changes (for propagatable field values) across Streams as well.

In fact, you can even integrate file changes and stream spec changes in the same atomic operation (if desired). The integration status indicator (istat) can now report what content needs to be integrated across Streams (only source code changes, only Stream spec changes, or source code changes, and/or Stream spec changes).

Stream spec integration can be turned on or off globally via a new server configurable. Don’t reinvent the wheel, manage your configuration as code with Helix Core!

Controlled Stream Views

Historically, child streams automatically inherited the views (paths, remapped, and ignored field values) of their ancestor (parent) streams. If you are using component based development (CBD), this complicated release streams that may want to be frozen in time, for example.

A new setting, Parent View, now exists on stream specifications to control view inheritance. Existing streams can be converted from “inherit” (the longstanding behavior) to “noinherit” (and vice versa). In addition, when converting to “inherit” to “noinherit”, you can have the system add comments into the stream spec detailing out where the views originated from. Customers can control the initial parent view setting based on a new server configurable. Easily employ CBD best practices with Helix Core today!

Shelved File Storage Optimizations

Shelve operations involving files will now only create new archive content when necessary. If a given file already exists via another shelf, instead of duplicating the archive content, the new shelf will simply reference the existing archive content.

This can result in substantial file storage savings if you frequently employ the shelve operation (ex: using Helix Swarm for code reviews) or if you are shelving very large files. This enhancement applies to new shelves created post-upgrade only. Scaling with Helix Core just got even easier!

Minimal Downtime Upgrade Enhancements

A new “p4 upgrades” command now allows you to monitor the status of an upgrade (ex: pending, running, completed, etc.) for a particular server or across all upstream servers in a multi-server environment. In addition, all upgrade steps introduced in versions 2019.2+ will now execute in the background, which can improve server availability and replication performance during an upgrade event. Easily stay up-to-date with new major Helix Core releases.

P4 Failover Enhancements

If a failover attempt does not succeed for any reason, any journalcopy or pull threads that were stopped will now be automatically restarted for you. Additionally, a failover event now displays pertinent details about the source and target of the failover process at the start of the command, including in a preview mode. This gives you a necessary sanity check before invoking a failover event. Fail over with confidence using built in functionality instead of having to write your own custom scripts!

What's New in Helix Core 2020.1?

DOWNLOAD PDF FULL RELEASE DETAILS

SSO with Helix Authentication Service (HAS)

Simplify and standardize your authentication process with Helix Authentication Service (HAS). It enables you to integrate Helix Core (including clients and plugins), Helix ALM, and Surround SCM with your organization's Identity Provider (IdP). When used in conjunction with Helix Core, it requires the use of the Helix Authentication Extension.

HAS currently supports the OpenID Connect and SAML 2.0 authentication protocols. This service is internally certified with Microsoft Azure Active Directory (AAD), Okta, and Google Identity.

Protections Optimizations

The P4 Protections Table has been optimized to allow you to more easily manage permissions. If you have a complex network topology, you can now assign multiple IP filters to a single protects entry.

If you have federated architecture and have a large number of protections entries, the replication overhead has been reduced. This reduces overall network traffic and wait time for file access in certain scenarios. Also included in this release is the ability to check protections based on a particular host.

P4 Failover Enhancements

Monitor the status of a target server via the new p4 heartbeat command. This more proactively identifies conditions in which a manual p4 failover event should be considered. New triggers and extensions include:

- heartbeat-missing

- heartbeat-resumed

- heartbeat-dead

New configurables are also available to tailor heartbeat monitoring to your needs. These include setting the desired interval time between heartbeat requests, defining the number of consecutive missed heartbeats to be declared dead, and more.

Stream Spec Permissions

You can set permissions for configuration changes just like files. Permission options on stream specs include read, open, and write. These permissions are also fully supported by P4Admin — the GUI for administrating Helix Core connections, depots, users, and groups.

Global Logging IDs

When working with structured logs, you can now correlate activity between two servers. Using the global logging ID, you can link activity on one server — for example an edge server —with activity on another server, like a commit. This makes it easier to troubleshoot issues across your topology.

What's New in Helix Core 2019.2?

DOWNLOAD PDF FULL RELEASE DETAILS

Customize Stream Spec Definitions

Admins can now add additional fields to a stream spec and have custom field IDs automatically assigned. These fields can store additional metadata related to a stream, and convey information about your particular framework.

This is especially useful if you have implemented component based development (CBD) or IP re-use for your team/organization. As an added bonus, these custom fields will also be visible in P4V. Check out the new p4 streamspec command for more information.

Independently Upgrade Your Servers

Keep your teams working during major version upgrades with improved upgrade performance. Now it is possible to achieve minimal downtime for end users when upgrading a federated installation of servers.

Update commit and edge servers separately without having to take all servers offline at the same time. This applies only when upgrading from 19.1 to 19.2 and beyond. It saves you time and ensures your teams remain productive.

Structured Log Versioning

Now you can control when you want to move to an updated schema to take advantage of new improvements. With this release, structured logs have a formal, versioned schema that you can use and test before upgrading.

This decreases your risk of experiencing unanticipated “breaking changes” to existing internal processes or monitoring that is connected to structured logs. This release also:

- Enables easier integration with external log monitoring solutions.

- Adds previously missing database statistics.

Version Server Configurables

When a server config variable is changed, a versioned history will now be stored on the server. View the server configurables history with the new p4 configure history command.

This gives you better understand historical changes that have been made over time. And you can more easily troubleshoot issues that might happen due to changing server settings.

Verify Files More Quickly

Verify archived files easier with the new “-Z” flag for p4 verify which is built on top of db storage. It allows you to quickly search through your system without needing to look through lazy copied files.

Obliterate in One Step

Purge and archive in a single step with the new “-p” flag for p4 obliterate. Now you can remove versioned files and keep the metadata with just one command.

Find Orphaned, Archived Files

Scan archives to find files that were left over from failed submits or archive-skipping obliterates. Orphaned archive file detection utilizes db.storage, which was introduced in 2019.1. Look for the new “-l” and “-d” flags for the p4 storage command.

What's New in Helix Core 2019.1?

DOWNLOAD PDF FULL RELEASE DETAILS

Increase Performance for p4 obliterate

The p4 obliterate operation is now faster and less resource-intensive. Files are computed in a fraction of the time compared with previous versions. Dramatic improvements have been noted in benchmarking. Contact your account team to learn more.

Improve Productivity With p4 submit Option

A new option improves developer productivity for p4 submit. Admins can configure p4 submit to initially commit only meta-data from the edge server to the commit server. The actual file content transfer is scheduled in the background for reverse replication. Developers do not need to wait for the files to transfer. This option can be set as the default transfer method. Depending on the size of files, and frequency, the performance improvement can be dramatic.

Customize Workflows and Extend Product Functionality

Extensions are a new way for administrators to customize workflows and extend the functionality of the server. Triggers and extensions perform similar functions. Extensions are written in the Lua language, and are versioned and managed in their own central depot as self-contained bundles of code, metadata, and other assets. They have a closer integration with tools, and include a programmatic API. The extension code runtime is embedded within the server executable, so you no longer need to update external language systems on each of your servers.

NOTE: This functionality is currently unavailable for the Windows platform.

Gain Flexibility With Private Editing of Streams Spec

Perforce Streams gains new flexibility. Developers can privately edit stream specs, modifying only their own workspace without impacting other users or the project. Then code changes and/or stream spec changes can each be committed atomically.

Enhance Visibility for Git LFS Locking

Helix Core 2019.1 supports Git LFS locks. Locks can be created and are visible using Git clients or the P4 commandline. Using Perforce clients, Git LFS locks are seen when you try to open the locked file. With Git clients, the lock is displayed during a commit.

What's New in Helix Core 2018.2?

DOWNLOAD PDF FULL RELEASE DETAILS

Simpler Command to Execute Failover

The new P4 failover command simplifies the process of initiating failover from a master to a standby server. This command consolidates several discrete tasks into one command line with arguments, and enhances administrative control for planned and unplanned service interruptions. You can also designate an optional “mandatory” standby server to better prepare your failover strategy.

Support for SAML 2.0 Authentication

Integrate your 2018.2 Helix Core server and clients with Helix SAML to authenticate users via the command-line or client using popular solutions, such as Ping Identity, Okta, and others.

What's New in Helix Core 2018.1?

DOWNLOAD PDF FULL RELEASE DETAILS

Failover Performance

The 2018.1 release provides reliable and consistent replication of standby servers in a disaster recovery (DR) setup. Failover to a standby server in a DR scenario is significantly faster due to multiple steps being removed from the process via automation and a new replication method. This improved operational efficiency will result in reduced time and cost to recover service from a failure.

Tightened Security

Admins can now hide the following sensitive server information from appearing within “p4 info”: server name, server address, server uptime, and server license IP address. For example, the license entry in “p4 info” will show “licensed” or “unlicensed.”

Improved Streams Usability

The 2018.1 release improves the usability of Streams by no longer requiring users to write long specs. Now, you can use wildcards to represent which folder and/or files are included in the path name of a Stream view.

What's New in Core 2017.2?

DOWNLOAD PDF FULL RELEASE DETAILS

Speed Up Remote Operations Even More with WAN Accelerators

The 2017.2 release allows you to use WAN acceleration technologies. WAN acceleration can be a great enhancement that dramatically increases the likelihood that remote sites are in sync at all times with central servers in a Perforce federated architecture deployment because the replication is quicker — even with extremely large files.

Boost Stability and Performance

Parallel sync operations are one of several techniques Perforce employs to make Helix Core the fastest VCS server on the planet. We’ve improved server resilience under load to support a greater number of simultaneous requests.

What's New in Core 2017.1?

DOWNLOAD PDF FULL RELEASE DETAILS

Faster File Transfers. Much Faster.

Transfer files over high latency networks up to 16 times faster than in 2016.2, even in federated deployments of Helix Core. See similar performance improvements for any communication between any server type — replicas, edge servers, commit servers, and proxies.

Support Git at Scale for Distributed Teams and CI

Helix Versioning Engine now supports Git at scale for distributed teams and multiple repos. Helix4Git speeds up builds by 40 to 80 percent and reduces storage by up to 18 percent with different mirroring options. For specifics, see Helix4Git.

Change Your File's Name or Location in a Single Command

Gone are the days when a “p4 move” command would require you to perform a “p4 edit” first. The 2017.1 release allows you to change the name or location of your files with a single command.

Identify and Sync Code Changes across Multiple DVCS Servers

Commands like “p4 files” and “p4 sync” now support using changelist identity so that it’s faster and easier to search, browse, and take actions on code changes that are shared across multiple Helix DVCS servers with different datasets.

Protect Your Servers from Outdated TLS Versions

Outdated cryptographic protocols compromise communications security over a computer network. This release enables greater flexibility to achieve tighter security by allowing admins to specify the minimum and maximum allowed TLS versions, preventing clients using other versions from connecting.

Filter and Search Remote Specs by Name

Find a remote specification of a central DVCS server faster so you can get up and running with something that works for you.

What's New in Core 2016.2?

Download PDF FULL RELEASE DETAILS

Submit Build Artifacts from Partitioned Clients

We gave you read-only clients, and you wanted more. We listened. Now you can submit build artifacts from partitioned clients to Helix while keeping new workspaces light and easily disposable for all your DevOps needs.

Turn Back Without Destroying History

Everybody makes mistakes. Easily undo an entire change list in a single operation, while retaining audit trails for enhanced security and compliance. Helix forgives, but doesn’t forget.

Delegate Permissions

Expand your circle of trust and delegate permissions over a specific depot or path to a specific group or user so you don’t have to carry the weight of system administration on your shoulders.

What's New in Core 2016.1?

Increase Collaboration Efficiency Among Distributed Team Members

Speed up your DVCS collaboration workflow. Now you can work locally, propose a change, create a new shelf for it, and push it to a remote server for a quick review before submitting it to the mainline. It’s faster and takes up less space in storage.

Ditto Mapping for Greater Flexibility

Stop jumping through hoops to make workspace mapping comply with your needs. Ditto mapping grants programmers the freedom to work on blocks of complex code individually, working from shared libraries without months of build-failure frustration.

Customize Your P4 Aliases

Unleash your inner James Bond and give your most-used commands p4 aliases that make logical sense to you. Or, go into stealth mode and string several p4 commands together to trigger behind-the-scenes processes that simplify workflows.

Boost Automation with 'p4 reshelve'

Tool builders will love boosting automation in Helix with the p4 reshelve command.